import javax.jms.JMSException;
import javax.jms.Message;
import javax.jms.MessageListener;
import javax.jms.TextMessage;

public class ConsumerMessageListener implements MessageListener {

    public void onMessage(Message message) {
        // We know the producer sends plain text messages, so we can cast directly here.
        // (With a plain MessageListener the parameter type itself must stay Message.)
        TextMessage textMsg = (TextMessage) message;
        System.out.println("Received a plain text message.");
        try {
            System.out.println("Message content: " + textMsg.getText());
        } catch (JMSException e) {
            e.printStackTrace();
        }
    }

}

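Alibaba Cloud Product Blog » Evolution of Image Service Architecture

Almost every website, web app, and mobile app today needs to display images, so the image service matters at every layer of the stack. Image servers have to be planned with some foresight, because upload and download speed is critical. That does not mean starting out with an elaborate architecture, but the design should at least offer a reasonable degree of scalability and stability. Many architectural designs exist; what follows is only my personal view.

For an image server, I/O is without doubt the most heavily consumed resource. A web application needs to keep its image service somewhat separate, otherwise the I/O load from serving images can bring the application down. For large sites and applications in particular, it is well worth separating image servers from application servers and building a dedicated image server cluster. The main advantages are:

1) Offloading I/O from the web servers: moving the resource-hungry image service elsewhere improves server performance and stability.

2) Allowing targeted optimization of the image servers: a caching scheme designed for images reduces bandwidth cost and speeds up access.

3) Improving the scalability of the site: adding image servers increases image-serving throughput.

From Web 1.0 through Web 2.0 to today's Web 3.0, image server architecture has changed as the volume of stored images has grown. The following describes three stages in that evolution.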

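Initial stage

Before describing the small image server architecture of the early days, let us look at NFS. NFS stands for Network File System. It was developed by Sun for sharing files over a network between different machines and operating systems. An NFS server can be seen as a file server for sharing files between UNIX-like systems: an exported directory is mounted onto a local directory and then used just like local files.

If you do not want to synchronize every image to every image server, NFS is the simplest way to share files. NFS is a distributed client/server file system. Its essence is sharing between machines: a user connects to the shared machine and accesses its files as if they were on a local disk. The implementation works as follows:

1) All front-end web servers mount, over NFS, the directories exported by the three image servers, so that the image servers receive the images the web servers write. The [Image1] server then mounts the export directories of the other two image servers locally, and Apache serves them to the outside.

2) Image upload

A user submits an upload request over the Internet, which is POSTed to a web server. After processing the image, the web server copies it to the corresponding locally mounted directory.

3) Image access

When a user requests an image, the [Image1] server reads it from the corresponding mounted directory.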

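Problems with this architecture:

1) Performance: the design depends too heavily on NFS. When the NFS service on an image server has problems, the front-end web servers may be affected. The main NFS problem is locking: deadlocks occur easily and can often be cleared only by a hardware reboot. Once the number of images reaches a certain scale, NFS also shows serious performance problems.

2) High availability: only one image server serves downloads to the outside, so it is a single point of failure.

3) Scalability: the image servers depend on each other too much, and there is little room for horizontal scaling.

4) Storage: upload hot spots on the web servers cannot be controlled, so disk usage across the image servers becomes unbalanced.

5) Security: anyone who has a web server's password can modify the NFS contents at will, so the security level is low.

Of course, images can also be synchronized between image servers without NFS, for example with FTP or rsync. With FTP, every image server keeps a copy of each image, which doubles as a backup. The drawback is that pushing an image to the servers over FTP takes time, and synchronizing asynchronously introduces delay, although this is usually acceptable for small image files. With rsync, once the data set reaches a certain size, every scan takes a long time, which also introduces delay.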

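Development stage

Once a site reaches a certain scale and places demands on the performance and stability of its image servers, the NFS-based architecture above runs into trouble: it depends heavily on NFS and contains a single point of failure, so the overall architecture has to be upgraded. The result is the image server architecture shown in the figure above, which introduces distributed image storage.

The approach is as follows:

1) After a user uploads an image to a web server, the web server processes it and then POSTs it to one of [Image1], [Image2] … [ImageN]. The image server receives the POSTed image, writes it to local disk, and returns a status code. The front-end web server decides what to do based on that status code: on success it generates thumbnails in the required sizes, applies watermarks, and writes the image server's ID and the image path to the database.

2) Upload control

To redirect uploads, change the ID of the target image server that the web servers POST to; this controls which image storage server receives new images. Each image storage server only needs nginx plus a Python or PHP service that receives and saves images. If you would rather not run a Python or PHP service, you can write an nginx extension module instead.

3) User access flow

When a user views a page, each image is fetched from the corresponding image server according to the URL of the requested image.

For example: http://imgN.xxx.com/image1.jpg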
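The upload and access flow described above can be sketched roughly as follows. This is a minimal in-memory illustration, not the author's implementation: the class name `ImageRouter`, its methods, and the map standing in for the database table are all assumptions, and the host pattern simply reuses the `imgN.xxx.com` placeholder from the example URL.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical sketch: send uploads to one image server and remember where each image lives. */
final class ImageRouter {
    // Stand-in for the DB table that maps an image name to its image server ID (assumption).
    private final Map<String, Integer> serverIdByImage = new HashMap<>();
    // ID of the image server that currently receives uploads.
    private int uploadTargetId;

    ImageRouter(int uploadTargetId) {
        this.uploadTargetId = uploadTargetId;
    }

    /** Upload control: point new uploads at a different image server. */
    void setUploadTarget(int serverId) {
        this.uploadTargetId = serverId;
    }

    /** Call after the image server has returned a success status for the POST. */
    int recordUpload(String imageName) {
        serverIdByImage.put(imageName, uploadTargetId);
        return uploadTargetId;
    }

    /** Builds the access URL from the stored server ID. */
    String urlFor(String imageName) {
        Integer id = serverIdByImage.get(imageName);
        if (id == null) {
            throw new IllegalArgumentException("unknown image: " + imageName);
        }
        return "http://img" + id + ".xxx.com/" + imageName;
    }
}
```

Because the server ID is stored with each image, changing the upload target only affects new uploads; existing images keep resolving to the server that holds them.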

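At this stage the image server architecture adds load balancing and distributed image storage, which goes some way toward handling high concurrency and large storage volumes. For load balancing, an F5 hardware load balancer is an option if the budget allows; the open source LVS software load balancer is another (caching can be enabled alongside it). This greatly increases the concurrency the system can handle, and servers can be reallocated as needed. There is one flaw: the same image may end up cached on several Squid instances, because the first request may be routed to squid1 and, after the LVS entry expires, a second request may go to squid2 or another instance. Compared with the concurrency problem this solves, that small amount of redundancy is entirely acceptable. The second-level cache in this architecture can be Squid, Varnish, or Traffic Server. When choosing open source cache software, consider the following points:

1) Performance: Varnish has a technical edge over Squid. It uses "Visual Page Cache" technology and makes better use of memory, avoiding Squid's frequent swapping of files between memory and disk, so it performs better than Squid. Varnish cannot cache to local disk. Its management port is also powerful: regular expressions can be used to purge parts of the cache quickly and in bulk. nginx caches through the third-party ncache module, with performance roughly on par with Varnish, but in an architecture nginx usually acts as the reverse proxy (nginx is now widely used for static files and can handle more than 20,000 concurrent connections). In a static-content architecture, if the front end sits directly behind a CDN or a layer-4 load balancer, nginx's own cache is entirely sufficient.

2) Avoid file-system-based caches. With a very large number of files, file system performance is poor. Caches such as Squid or nginx's proxy_store and proxy_cache stop meeting requirements once the cached volume grows. The open source Traffic Server caches directly on raw disks and is a good choice. In China, the main publicly known large-scale user is Taobao; this is not because Traffic Server is poor, but because it was open-sourced late. Traffic Server was used inside Yahoo for more than 4 years, mainly for CDN service, where a CDN distributes specific HTTP content, usually static content such as images, JavaScript, and CSS. A cache built on something like leveldb would, I expect, also work well.

3) Stability: Squid, the veteran cache, is the more dependable choice. Judging from feedback from users around me, Varnish occasionally crashes. Traffic Server has had no known data corruption during its use at Yahoo, so it is also fairly reliable. Looking ahead, I would like to see Traffic Server gain more users in China.

The design above removes the early dependence on NFS and the single point of failure, balances disk usage across image servers, and improves security, but it introduces a new problem: redundancy when scaling image servers horizontally.

If you simply store images on ordinary disks, first consider what the physical disks can actually handle: a 7200 rpm disk and a 15000 rpm disk perform very differently. As for the file system (xfs, ext3, ext4, or ReiserFS), run some performance tests; judging from published benchmark data, ReiserFS is better suited to storing small image files. Inodes also need attention when creating the file system, so choose a suitable inode size. Linux assigns every file an index node (inode) number; an inode can be thought of simply as a pointer that always points to where the file is actually stored. A file system allows only a limited number of inodes. With too many files, even if each one is an empty 0-byte file, the system will eventually be unable to create new files because the inode space is exhausted. You therefore have to trade off space against speed and build a sensible directory index.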

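Cloud storage stage

In 2011, at the Baidu Union Summit, Robin Li (李彦宏) said that the Internet had entered the age of images. Image services have long accounted for a large share of any Internet application, and image handling has become a basic skill for companies and developers. Upload and download speed matters more than ever, and handling images well means facing three main problems: heavy traffic, high concurrency, and massive storage.

Alibaba Cloud Open Storage Service (OpenStorageService, OSS for short) is a massive, secure, low-cost, and highly reliable cloud storage service offered by Alibaba Cloud. Users can upload and download data at any time and from anywhere through a simple REST interface, and can also manage data through a web page. OSS provides Java, Python, and PHP SDKs to simplify programming. On top of OSS, users can build multimedia sharing sites, network drives, personal and enterprise backup, and other services based on large-scale data. The discussion of cloud image storage below uses Alibaba Cloud OSS as its example; the figure above is a simplified diagram of the OSS architecture.

Cloud storage in the true sense is not storage but a cloud service. The main advantages of using a cloud storage service are:

1) Users do not need to know the type, interface, or storage medium of the storage devices.

2) Users do not need to care about where the data is stored.

3) Users do not need to manage or maintain storage devices.

4) Users do not need to think about data backup and disaster recovery.

5) Integration is simple, and users just consume the storage service.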

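Architecture modules

1) KV Engine

Object metadata and data files in OSS are both stored on the KV Engine. In version 6.15, the KV Engine will use version 0.8.6 together with the OSSFileClient provided for OSS.

2) Quota

This module records the mapping between Buckets and users, and each Bucket's resource usage at one-minute granularity. Quota also provides an HTTP interface that the Boss system can query.

3) Security module

The security module records the ID and Key of each User and provides user authentication for OSS access.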

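OSS terminology

1) Access Key ID & Access Key Secret (API keys)

When a user registers for OSS, the system assigns a pair consisting of an Access Key ID and an Access Key Secret, called an ID pair. It identifies the user and is used for signature verification when accessing OSS.

2) Service

The virtual storage space that OSS provides to a user. Within it, each user can own one or more Buckets.

3) Bucket

A Bucket is a namespace on OSS. Bucket names are globally unique across OSS and cannot be changed. Every Object stored on OSS must belong to a Bucket. An application, such as an image sharing site, can correspond to one or more Buckets. A user can create at most 10 Buckets, but there is no limit on the number or total size of the Objects in each Bucket, so users do not need to worry about data scalability.

4) Object

In OSS, each user file is an Object, and each file must be smaller than 5 TB. An Object consists of a key, data, and user meta: the key is the Object's name, data is its content, and user meta is the user's description of the Object.

Usage is very simple. With the Java SDK:

OSSClient ossClient = new OSSClient(accessKeyId,accessKeySecret);

PutObjectResult result = ossClient.putObject(bucketname, bucketKey, inStream, new ObjectMetadata());

Executing the code above uploads the image stream to the OSS server.

Accessing the image is just as simple. Its URL is: http://bucketname.oss.aliyuncs.com/bucketKey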

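Distributed file system

Distributed storage has several benefits. It provides redundancy automatically, so we do not need to run backups or worry about data safety; with a very large number of files, backup is painful, and a single rsync scan can take several hours. Distributed storage is also easy to expand dynamically. Among other file systems used in China, TFS (http://code.taobao.org/p/tfs/src/) and FastDFS both have users. TFS is strongest at storing small files and is used mainly by Taobao. FastDFS shows performance problems when concurrent writes exceed about 300, and its stability is not ideal.

OSS storage uses Pangu (盘古), a highly available and highly reliable distributed file system developed in-house by Alibaba Cloud on the Apsara (飞天) 5K platform. Pangu is similar to Google's GFS. It has a master-slave architecture: the Master manages metadata, and the Slaves, called Chunk Servers, handle read and write requests. The Master itself is a multi-master setup based on Paxos; when one Master dies, another takes over quickly, so failure recovery generally completes within a minute. Files are stored in chunks, each chunk has three replicas placed on different racks, and end-to-end data verification is provided.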

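HAProxy load balancing

The architecture is an automatic hashing scheme based on HAProxy. It is a newer cache architecture in which nginx sits at the very front and proxies to the cache machines. Behind nginx is the cache group; nginx hashes the URL and distributes requests across the cache machines accordingly.

This architecture makes it easy to upgrade a pure Squid cache, because nginx can be installed on the existing Squid machines. nginx can also cache, so very heavily requested links, such as favicon.ico and the site logo, can be cached directly on nginx without an extra proxy hop, which helps keep the image servers highly available and fast. The load balancer handles all OSS requests and fails over automatically when a back-end HTTP server goes down, which keeps the OSS service uninterrupted.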

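CDN

Alibaba Cloud CDN is a distributed cache system spread across China. It caches site files (such as images or JavaScript files) on servers in data centers in many cities. When a user visits your site, the data is fetched from a server in a nearby city, so the end user sees very fast access.

Alibaba Cloud CDN has more than 100 nodes deployed nationwide and delivers good acceleration. When site traffic surges suddenly, there is no need to scramble to add network bandwidth; the CDN absorbs it. As with OSS, you first enable the CDN service on aliyun.com. After that, you create a distribution (a distribution channel) in the management console. Each distribution has two required parts: a distribution ID and an origin address.

With Alibaba Cloud OSS and CDN it is easy to accelerate content per bucket, because each bucket has its own subdomain, and cached copies can be purged from the CDN file by file. This solves the storage and network problems of a service simply and economically; after all, most of the storage and bandwidth of a typical site or application is consumed by images or video.

Across the industry, consumer cloud storage such as Dropbox and Box.net abroad has become very popular recently. In China, the better cloud storage services at present are mainly Qiniu (七牛云存储) and UPYUN (又拍云存储).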

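Separating upload from download

Image servers handle far more downloads than uploads, and the business logic of the two differs clearly. Upload servers rename images and record them in the database; download servers do dynamic processing such as adding watermarks and resizing. From a high-availability standpoint we can tolerate some failed downloads, but never a failed upload, because a failed upload means lost data. Separating the two ensures that download load does not affect uploads. In addition, the load balancing strategies for the download entry point and the upload entry point differ. Uploads pass through the Quota Server, which records the relationship between users and images, among other logic. For downloads, a request that bypasses the front-end cache and reaches the back-end business logic has to fetch the image path information from OSS. Alibaba Cloud will soon release CDN-based nearest-node upload, which automatically selects the CDN node closest to the user so that both upload and download speeds are optimized. Compared with a traditional IDC, access is several times faster.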

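Hotlink protection for images

If a service has no hotlink protection, hotlinked traffic causes bandwidth and server load problems. A common solution is to add a Referer ACL check to reverse proxy software such as nginx or Squid, and OSS also provides Referer-based hotlink protection. OSS additionally offers a more advanced mechanism, URL signing, which works as follows.

First, make sure the bucket's permission is private, so that every request to the bucket is considered valid only after its signature has been verified. Then, based on the operation type, the bucket, the object, and the expiry time, generate a signed URL dynamically. Users you authorize can perform the corresponding operation with this signed URL until it expires.

The Python code for signing is as follows:

import base64, hashlib, hmac, urllib.parse

h = hmac.new(b"OtxrzxIsfpFjA7SwPzILwy8Bw21TLhquhboDYROV", b"GET\n\n\n1141889120\n/oss-example/oss-api.jpg", hashlib.sha1)

signature = urllib.parse.quote_plus(base64.b64encode(h.digest()).decode())

The method can be any of PUT, GET, HEAD, or DELETE; the last parameter, "timeout", is the expiry time in seconds. A signed URL computed with the Python method above looks like this:

http://oss-example.oss-cn-hangzhou.aliyuncs.com/oss-api.jpg?OSSAccessKeyId=44CF9590006BF252F707&Expires=1141889120&Signature=vjbyPxybdZaNmGa%2ByT272YEAiv4%3D

Computing signed URLs dynamically in this way effectively protects the data stored on OSS from being hotlinked by others.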
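For readers using the Java SDK, the same computation can be sketched with the JDK's standard crypto classes. This is only an illustration under stated assumptions: the class `OssUrlSigner` and its `sign` method are hypothetical names, not part of the OSS SDK, and the string-to-sign layout simply mirrors the Python example (method, two empty lines, expiry, resource).

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.util.Base64;
import javax.crypto.Mac;
import javax.crypto.spec.SecretKeySpec;

/** Hypothetical helper: HMAC-SHA1 signature for a URL, mirroring the Python snippet. */
final class OssUrlSigner {
    static String sign(String accessKeySecret, String method, long expires, String resource) {
        // Same layout as the Python example: method, two blank fields, expiry, resource path.
        String stringToSign = method + "\n\n\n" + expires + "\n" + resource;
        try {
            Mac mac = Mac.getInstance("HmacSHA1");
            mac.init(new SecretKeySpec(accessKeySecret.getBytes(StandardCharsets.UTF_8), "HmacSHA1"));
            byte[] digest = mac.doFinal(stringToSign.getBytes(StandardCharsets.UTF_8));
            // Base64 first, then URL-encode so '+', '/' and '=' are safe in a query string.
            return URLEncoder.encode(Base64.getEncoder().encodeToString(digest), "UTF-8");
        } catch (GeneralSecurityException | UnsupportedEncodingException e) {
            throw new IllegalStateException("signing failed", e);
        }
    }
}
```

The returned value would be appended as the `Signature` query parameter, next to `OSSAccessKeyId` and `Expires`, as in the example URL above.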

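Image editing API

For online image editing, GraphicsMagick (http://www.graphicsmagick.org/) will be familiar to anyone working on Internet technology. GraphicsMagick was forked from ImageMagick 5.5.2 and has since become more stable and better: GM is smaller and easier to install, more efficient, and comes with extensive documentation. Its commands are essentially the same as ImageMagick's.

GraphicsMagick provides a rich API covering cropping, scaling, compositing, watermarking, image conversion, filling, and more. Its SDK bindings are also plentiful, including Java (im4java), C, C++, Perl, PHP, Tcl, and Ruby. It supports more than 88 image formats, including the important DPX, GIF, JPEG, JPEG-2000, PNG, PDF, PNM, and TIFF, and it runs on the vast majority of platforms; Linux, Mac, and Windows are all fine. However, building an image processing service on your own places relatively high I/O demands on the servers, and these open source image libraries are still not very stable. When using GraphicsMagick I ran into a Tomcat process crash that required restarting Tomcat by hand.

Alibaba Cloud has now opened its image processing API to the public. It covers most common needs: thumbnails, image watermarks, text watermarks, styles, pipelines, and so on. Developers can use these very conveniently, and I hope more and more developers will build excellent products on top of OSS.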

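Technical Elements of Architecting a High-Performance, Massive-Scale Image Server - 北游运维 - OSChina (开源中国社区)

In another article of mine, "A Case of nginx Performance Improvement" (《nginx性能改进一例》), I explained that when the number of images is large, nginx's throughput is limited by file system I/O. This means that at large scale, if operations still rely on a traditional file system, both backup cost and front-end cost become impossible to bound. Do not expect tuning a few file system parameters to yield much, and do not bother with directory hashing plus rewrite rules either: the gain is small, because recent file systems enable dir_index by default, which solves the slowdown caused by too many files in a single directory. Another option is to buy high-performance hardware such as SSDs or Fusion-io cards to handle random I/O, though you still have to put up with the pain of backups.

First, look at the logical architecture diagram; this is the approach the major companies use today.

This is a rough logical diagram. Actual deployment mixes modules on machines according to the kind of resources each one consumes.

Point 1, the need for distributed storage: original images are stored in distributed storage, which has several benefits. It provides redundancy automatically, so we do not need to run backups or worry about data safety; with a very large number of files, backup is painful, and a single rsync scan can take several hours. Distributed storage is also easy to expand dynamically. The one regret is that few systems are well suited to small files. The only ones I know of are FastDFS, Taobao's TFS, and MongoDB. TFS has been proven at Taobao's scale, but its documentation and tooling are sparse; if you can master TFS, I think it is worth trying.

Point 2, handle upload and download separately: the upload load and the download load on image servers usually differ greatly; at most companies, download load is n times the upload load. The business logic also differs clearly: upload servers rename images and record them in the database, while download servers do dynamic processing such as adding watermarks and resizing. From a data standpoint we can tolerate some failed downloads, but never a failed upload, because a failed upload means lost data. Separating the two ensures that download load does not affect uploads. In addition, the load balancing strategies for the download and upload entry points differ, as explained below.

Point 3, use a cache layer: distributed storage solves storage safety, but performance still has to be solved with caching. Serving files to users directly from distributed storage cannot sustain many requests per second, and sites like Taobao use two cache layers. Two points matter when choosing open source cache software. First, the cache must hold a large volume, so that as many hot images as possible stay cached. A pure in-memory cache such as Varnish performs well, but its capacity is bounded by memory, which makes it a poor fit for image caching. Second, avoid file-system-based caches. As I measured in another article, file system performance is poor when the number of files is very large; caches such as Squid or nginx's proxy_store and proxy_cache stop meeting requirements once the cached volume grows. The open source Traffic Server caches directly on raw disks and is a good choice; a cache built on something like leveldb would, I expect, also work well. One note: it is best not to depend on a third-party CDN for caching. Many third-party CDN offerings now provide not only content distribution but also a second-level cache. The biggest risk is that a change on the third party's side suddenly multiplies the traffic going back to your origin. Whether your architecture can withstand that needs careful evaluation. If cost allows, keeping the service under your own control is the most dependable option.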

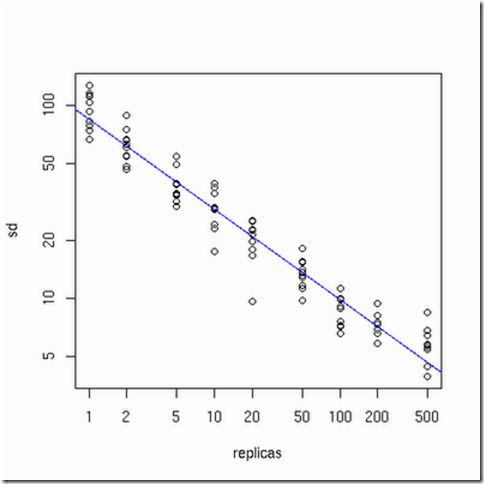

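Point 4, use consistent hashing for download load balancing: as the business grows, traffic grows, and after a while a single cache can no longer cope, so expanding the cache tier becomes routine. The weakness of traditional hashing is that every expansion reassigns the hash mapping, most cached entries become invalid, and back-end load surges. Load balancing on the URI with consistent hashing avoids the mapping change caused by adding or removing caches. Most open source load balancers, HAProxy among them, support this today. For tuning consistent hashing, see the figure below (taken from the web), which shows what ratio of physical nodes to virtual nodes gives the most even distribution.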
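The idea can be illustrated with a small hash ring. This is a minimal sketch, not HAProxy's implementation: the class `ConsistentHashRing`, the node names, and the choice of MD5 for placing points on the ring are all assumptions made for the example.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical sketch of a consistent hash ring with virtual nodes. */
final class ConsistentHashRing {
    private final TreeMap<Long, String> ring = new TreeMap<>();
    private final int virtualNodes;

    ConsistentHashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    /** Places `virtualNodes` points for this cache node on the ring. */
    void addNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    void removeNode(String node) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.remove(hash(node + "#" + i));
        }
    }

    /** Returns the cache node owning the first ring point at or after the URI's hash. */
    String nodeFor(String uri) {
        if (ring.isEmpty()) {
            throw new IllegalStateException("no cache nodes");
        }
        Map.Entry<Long, String> entry = ring.ceilingEntry(hash(uri));
        return (entry != null ? entry : ring.firstEntry()).getValue(); // wrap around the ring
    }

    /** First 8 bytes of the MD5 digest, interpreted as a long. */
    private static long hash(String key) {
        try {
            byte[] d = MessageDigest.getInstance("MD5").digest(key.getBytes(StandardCharsets.UTF_8));
            long h = 0;
            for (int i = 0; i < 8; i++) {
                h = (h << 8) | (d[i] & 0xff);
            }
            return h;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

When a cache node is added, a URI either stays on its previous node or moves to the new one, so only a fraction of the cache is invalidated. More virtual nodes per physical node generally spread keys more evenly, at the cost of a larger ring.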

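Point 5, use CDN distribution and multiple domain entry points: a good user experience requires the fast distribution a CDN provides, and for cost reasons a third-party CDN platform can be purchased. As for multiple domains, most browsers limit the number of concurrent connections per domain, so serving images from several domains speeds up image display.

That about covers the deployment of image servers. Other fine-tuning details are not discussed here.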

Big Data Processing Series (1): Using Java Thread Pools - cstar(小乐) - 博客园

Using ThreadPoolExecutor with a bounded queue

import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class ThreadPool {

    private static final String POOL_NAME = "mypool";

    // Singleton instance returned by getFixedInstance().
    private static final ThreadPool threadFixedPool = new ThreadPool();

    // Bounded work queue: at most 2 tasks can wait.
    public final ArrayBlockingQueue<Runnable> queue = new ArrayBlockingQueue<Runnable>(2);

    private final ExecutorService executor;

    public static ThreadPool getFixedInstance() {
        return threadFixedPool;
    }

    private ThreadPool() {
        // corePoolSize 2, maximumPoolSize 4, 60 s keep-alive; AbortPolicy rejects when saturated.
        executor = new ThreadPoolExecutor(2, 4, 60, TimeUnit.SECONDS, queue,
                r -> {
                    // Daemon threads do not keep the JVM alive after main returns.
                    Thread t = new Thread(r, POOL_NAME);
                    t.setDaemon(true);
                    return t;
                },
                new ThreadPoolExecutor.AbortPolicy());
    }

    public void execute(Runnable r) {
        executor.execute(r);
    }

    public static void main(String[] params) {
        class MyRunnable implements Runnable {
            public void run() {
                System.out.println("OK!");
                try {
                    Thread.sleep(10);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
            }
        }

        int count = 0;
        for (int i = 0; i < 10; i++) {
            try {
                ThreadPool.getFixedInstance().execute(new MyRunnable());
            } catch (RejectedExecutionException e) {
                e.printStackTrace();
                count++;
            }
        }

        try {
            System.out.println("queue size:" + ThreadPool.getFixedInstance().queue.size());
            Thread.sleep(2000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println("Reject task: " + count);
    }

}

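First, look at the important parameters in this code: corePoolSize is 2, maximumPoolSize is 4, the task queue holds 2 entries, each task takes about 10 ms on average, and there are 10 concurrent tasks in total.

Running this code, we find that 4 tasks fail. This confirms the order of execution of a thread pool with a bounded queue, as described earlier. When a new task is submitted through execute(Runnable) and fewer than corePoolSize threads are running, a new thread is created to handle it. If corePoolSize or more threads are running, the task is queued, and a new thread is created only when the queue is full and fewer than maximumPoolSize threads are running. If the thread count has reached maximumPoolSize and the queue is full, further requests are rejected.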
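With 10 ms tasks the exact number of rejections depends on timing. The following sketch makes the rule deterministic by holding every task on a latch until all submissions are done; the class `BoundedPoolDemo` and its method are illustrative names, not part of the original program.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

/** Illustrative sketch: count rejections when no task can finish during submission. */
final class BoundedPoolDemo {
    static int countRejected(int core, int max, int queueCapacity, int tasks) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(core, max, 60, TimeUnit.SECONDS,
                new ArrayBlockingQueue<Runnable>(queueCapacity),
                new ThreadPoolExecutor.AbortPolicy());
        CountDownLatch release = new CountDownLatch(1);
        int rejected = 0;
        try {
            for (int i = 0; i < tasks; i++) {
                try {
                    pool.execute(() -> {
                        try {
                            release.await(); // hold the worker until all submissions are done
                        } catch (InterruptedException e) {
                            Thread.currentThread().interrupt();
                        }
                    });
                } catch (RejectedExecutionException e) {
                    rejected++;
                }
            }
        } finally {
            release.countDown(); // let the accepted tasks finish
            pool.shutdown();
        }
        return rejected;
    }

    public static void main(String[] args) {
        System.out.println("Rejected: " + countRejected(2, 4, 2, 10));
    }
}
```

With core 2, max 4, and a queue of 2, the pool accepts 4 running tasks plus 2 queued ones, so 10 submissions yield 4 rejections, matching the result described above.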

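Now adjust the parameters and raise the number of concurrent tasks to 200. The result is Reject task: 182, which means 18 tasks succeeded: after a thread finishes one request, it goes on to handle the next one that arrives. In a real production environment new requests keep coming in. The current one may be rejected, but as soon as the pool's threads finish their present tasks they can handle the next request sent to them.

A bounded queue gives the system overload protection. Under heavy pressure the system's processing capacity does not drop to zero; it keeps serving requests normally, even though some are rejected. How to reduce the number of rejections, and what to do with rejected requests, will be covered in my next article, "System Overload Protection" (《系统的过载保护》).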


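Incremental database synchronization in Kettle using timestamps (Part 1) - Armin - 博客园

The main idea of this experiment is to add an extra field, a timestamp, when creating the database tables. When synchronizing tables tt1 and tt2, we check which table was updated most recently. That table is treated as the new table, and the other, as the old table, is updated with the data from the new one.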

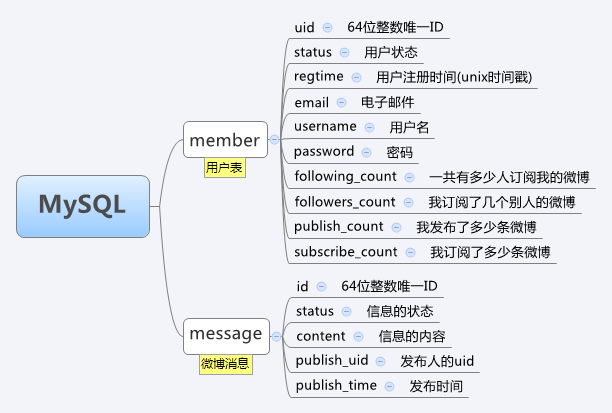

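The experiment data is as follows:

mysql database 5.1

test.tt1( id int primary key , name varchar(50) );

mysql.tt2( id int primary key, name varchar(50) );

The snapshot table can be stored in the test database. For simplicity it can also be created as a temporary table.

The data is shown in figure kettle-1.

[Figure kettle-1]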

============================================================

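The main flow is shown in figure kettle-2.

[Figure kettle-2]

In prepare, a timestamp field is added to tables tt1 and tt2. Because tt1 and tt2 live in different databases, two separate database connections are created.

prepare

[Figure kettle-3]

After this job runs, the following field appears when querying the database:

[Figure kettle-4]

Next, let us perform one insert and one update on table tt1.

[Figure kettle-5]

Whether the operation on the source table is an insert or an update, the corresponding updateTime changes.

Whichever of tt1 and tt2 holds the most recent updateTime value is the new table.

The following screenshot mainly shows main_thread:

[Figure kettle-6]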

The hierarchy of Main is as follows:

Main
    START
    Main.prepare
    Main.main_thread
    {
        START
        main_thread.create_tempTable
        main_thread.insert_tempTable
        main_thread.tt1_tt2_syn
        SUCCESS
    }
    Main.finish
    SUCCESS

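The process inside main_thread is as follows. It runs as a self-contained unit that loops once every 200 s. If one of the designated tables, tt1 or tt2, is updated or has rows inserted during that time, the change in its updateTime field is detected and the tables are synchronized. If no update has occurred, the switch takes its default path, which writes to the log, and the loop continues.

First a snapshot table is created, and the maximum (latest) timestamp values of tt1 and tt2 are inserted into it. Then a transformation determines which table has the latest updateTime value and chooses whether tt1 updates tt2 or tt2 updates tt1.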

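main_thread.create_tempTable.JOB:

main_thread.insert_tempTable.Job:

PS: the second SQL statement should be changed to the following (it fails otherwise):

set @var1 = ( select MAX(updatetime) from tt2);

insert into test.temp values ( 2 , @var1 ) ;

This is because conn is connected to mysql (the database instance name), whereas the snapshot table and tt1 are both stored in test (the database instance name).

The statements in the figure above insert two rows into the temp table. In the row with id 1, temp.lastTime holds the latest updateTime value selected from tt1; in the row with id 2, temp.lastTime holds the latest updateTime value selected from tt2.

The id serves as a reference for the later switch step, indicating whether the latest updateTime comes from tt1 or tt2. A field such as tableName varchar(50), holding the database.table name that the latest updateTime belongs to, would work equally well.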

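main_thread.tt1_tt2_syn.Transformation:

First, create a connection to the temp table in the test database and select the id of the record whose lastTime value is the latest. To do this, sort temp by lastTime in descending order, select id, and limit the result to one row.

Then switch on the value of id.

I would have liked to use SQL Executor here, but it cannot return the id value. Table input can return the id, which the switch step then receives.

The figure below corresponds to switch id = 1, that is, tt1 updates tt2. Pay attention to the choice of new and old data sources in the merge rows comparison step and to the Target table in Insert/Update.

The figure below corresponds to switch id = 2, that is, tt2 updates tt1. Again, pay attention to the choice of new and old data sources in the merge rows comparison step and to the Target table in Insert/Update.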

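Because an extra column wastes a lot of space, the finish step removes the updateTime field once synchronization has ended. This mirrors the prepare operation in Main.

Main.finish

The experiment environment is now set up. The data tests come next and will be written up in the following post.

Triggers are of course another good way to synchronize, and will be covered in a later post.

Compared with triggers, the timestamp approach is simpler and more general. However, the timestamp field in the tables takes up space, and the approach cannot handle deletes. When a row is deleted from a table, that table should become the new table and update the others, but because the row is gone, there is nothing for the timestamp to attach to, so the change cannot be recorded.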

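Open Source ETL Tool Kettle Series: Design Techniques for Incremental Updates - 技术门户 | ITPUB

Incremental update in ETL depends heavily on the tool and on the design method. Kettle supports it mainly through the Insert / Update step, the Delete step, and the Database Lookup step. The design method is chosen according to the scenario. This article discusses Kettle's approach, but it may also be useful with other tools. It cannot cover every situation, and discussion is welcome.

Scenarios

By type of data change, incremental updates fall roughly into four cases:

1. Inserts only, no updates

2. Updates only, no inserts

3. Both inserts and updates

4. Deletes, inserts, and updates

Cases 1, 2, and 3 follow roughly the same approach, though the steps used differ slightly. The general method is to add a timestamp column to the source database and keep this timestamp in the corresponding table after transformation. On each extraction, first read the maximum timestamp from the target table, pass it as a parameter to the corresponding source table, and use it as the filter condition for extracting data. The timestamp must again be kept after extraction. The source timestamp column must default to sysdate, the current time (taking the source database's clock as the reference), and the target database must keep the original timestamp rather than the time of extraction.

For the first case, use Kettle's Insert / Update step with the Don't perform any update option checked. This tells Kettle to perform inserts only.

The second case tends to arise when data errors lead to corrections in the source database and the target must be corrected to match. Usually not all data is updated, only the rows that meet certain conditions. Kettle's Update step performs updates only. For how to apply filter conditions dynamically, see the previous article.

The third case is the most common. It also uses Kettle's Insert / Update step, but with the Don't perform any update option left unchecked.

The fourth case is more complex and is discussed separately below.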

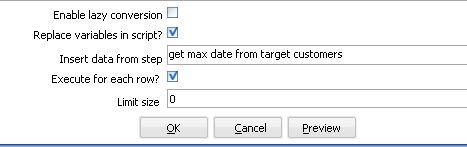

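For cases 1, 2, and 3, consider the following example.

The example assumes a source table customers with the fields id, firstname, lastname, and age, with id as the primary key, plus a timestamp field that defaults to sysdate. The result after transformation looks like: id, firstname, lastname, age, updatedate. The overall design flow is roughly as follows:

Figure 1

The SQL in the first step follows roughly this pattern:

Select max(updatedate) from target_customer ;

Notice that the hop between the first and second steps is a yellow line. This is because the second table input step uses the output of the previous step as a parameter, so Kettle draws the hop in yellow. The SQL of the second table input follows roughly this pattern:

Select field1 , field2 , field3 from customers where updatedate > ?

The question mark at the end means the statement expects a parameter. In this table input you need to check the replace variable in script option and the execute for each row option. Kettle then executes the SQL in a loop, once for each row in the data set passed in by the preceding parameter step.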
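The two table input steps amount to a high-water-mark filter. The sketch below models them in memory rather than against a database; the `IncrementalExtract` class, the `Row` type, and the use of epoch milliseconds for `updatedate` are assumptions made for illustration and do not reflect how Kettle works internally.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrative in-memory model of timestamp-based incremental extraction. */
final class IncrementalExtract {
    /** A row with its timestamp column (epoch milliseconds here for simplicity). */
    static final class Row {
        final int id;
        final String name;
        final long updatedate;

        Row(int id, String name, long updatedate) {
            this.id = id;
            this.name = name;
            this.updatedate = updatedate;
        }
    }

    /** Step 1: "select max(updatedate) from target"; returns 0 for an empty target. */
    static long maxUpdatedate(List<Row> target) {
        long max = 0;
        for (Row r : target) {
            max = Math.max(max, r.updatedate);
        }
        return max;
    }

    /** Step 2: "select ... from source where updatedate > ?". */
    static List<Row> changedSince(List<Row> source, long watermark) {
        List<Row> out = new ArrayList<>();
        for (Row r : source) {
            if (r.updatedate > watermark) {
                out.add(r);
            }
        }
        return out;
    }
}
```

Because the target keeps the source's original `updatedate` rather than the extraction time, the maximum in the target is always a valid lower bound for the next run.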

Figure 2

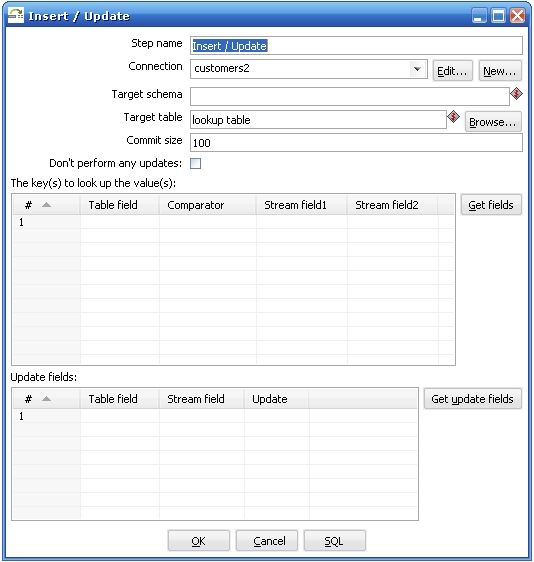

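The third step, Insert / Update, needs some explanation.

Figure 3

To execute this step, Kettle compares two data streams. One is the target database, which you specify in Target table; its fields go in the Table field column on the left of The keys to look up the value(s). The other is the stream passed in from the previous step; its fields go on the right of The keys to look up the value(s). Kettle first uses the incoming key to look up records in the database. If none is found, it inserts a record whose values all match the incoming ones. If the key matches an existing record, Kettle compares the two records on the update fields you specified. If the data is identical, Kettle does nothing; if it differs, Kettle performs an update. So you must first make sure the key fields you specify uniquely identify a record. There are two situations here:

1. Dimension tables

2. Fact tables

Dimension tables mostly use a single primary key field to decide whether two records match. The primary key in the source database does not necessarily correspond to the primary key of the matching table in the target database. In that case the source primary key becomes a business key, and you need some rule to decide whether business keys are equal. With multiple data sources, business keys may even collide. What you then compare is a new, real primary key that you generate yourself, for example through something like a sequence.

After transformation and before loading into the target database, fact tables usually identify a record uniquely through several foreign key constraints. Whether two records are equal is decided by the foreign keys to all the dimension tables. After comparing the records, you still have to decide yourself whether the new record needs a new primary key.

Both situations are application specific. If your transformation is simple, with one source database feeding one target database and business keys identical to surrogate keys, you do not need to think about any of this.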
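The lookup-then-compare behaviour of Insert / Update described above can be modelled in a few lines. This is a simplified sketch with a single key field and a single compared field; the class `InsertUpdateSketch`, the `Action` enum, and the map standing in for the target table are assumptions made for the example.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

/** Illustrative model of insert-or-update semantics keyed on a unique field. */
final class InsertUpdateSketch {
    enum Action { INSERTED, UPDATED, UNCHANGED }

    // Stand-in for the target table: key field -> compared (update) field.
    private final Map<Integer, String> target = new HashMap<>();

    Action apply(int key, String value) {
        if (!target.containsKey(key)) {
            target.put(key, value); // key not found: insert the incoming row
            return Action.INSERTED;
        }
        if (Objects.equals(target.get(key), value)) {
            return Action.UNCHANGED; // identical data: do nothing
        }
        target.put(key, value); // key found but data differs: update
        return Action.UPDATED;
    }
}
```

The sketch only works because the key is unique in the target, which is the same precondition the text places on the key fields.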

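Deletes, inserts, and updates

First determine whether you are dealing with a dimension table. If so, this is probably an SCD (slowly changing dimension) situation, and Kettle's Dimension Lookup step can handle it. For a fact table the method may differ; the main difference between the two is how the primary key is determined.

Fact tables are usually very large. First establish whether the changed data falls under some clear restriction, such as a particular time range or a condition on certain fields, and narrow the result set as far as possible. Then determine the state of each record by id: it does not exist and must be inserted, it exists and must be updated, or it no longer exists at the source and must be deleted. Apply the corresponding operation for each state.

Deletes are handled with the Delete step. It works on the same principle as Insert / Update, except that when a matching id is found it deletes rather than updates. The Insert / Update part comes afterwards; you will probably need to create a separate transformation for it and define the execution order of the two transformations in a Job.

If a large share of the data changes, say beyond a certain percentage, and execution is slow, consider rebuilding the table instead.

You also need to consider what happens to the fact table, or other tables that depend on a dimension table, when dimension data is deleted. Foreign key constraints may be hard to drop, or, once dropped, may be impossible to add back, so the dependent fact data may have to be dealt with first. It depends on the application. Simply deleting the fact data is easy. If the fact records must be kept, you can add a record to the dimension table that has only a primary key and empty values in every other field; after the dimension data is deleted, the fact rows are updated to point to this empty dimension record.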

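Scheduled incremental updates

Sometimes updates simply run on a schedule, such as daily or weekly. In that case the target table does not need a timestamp field to track the latest time ETL ran. You can add a condition on the source database's time directly, for example:

Startdate > ? and enddate < ?

or with only a startdate:

Startdate > ? (yesterday's or last week's time)

A parameter has to be passed in here, obtained with the get System Info step. You can also control the precision of the time, for example to the day rather than to the second.

You also need to think about what happens when an update fails. If, for some reason, one day's update did not run, that day's records may have to be recovered by hand. If failures are likely to be frequent, adding a time field to the target database and taking the maximum timestamp is the more general approach, even though it adds a rarely used field.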

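Efficiency and complexity

Deletes and updates are both time-consuming. They repeatedly look up records in the database and then delete or update them one row at a time, so poor efficiency is to be expected. Shrink the source data set as much as possible, reduce the amount of data transferred, and lower the complexity of the ETL.

Advantages and disadvantages of the timestamp method

Advantages: simple to implement, works easily across databases, and the run is easy to design.

Disadvantages: wastes a lot of storage, since the timestamp field is not used outside the ETL process. If the job runs on a schedule and one run fails, some data may be lost.

Other incremental update methods:

The core problem of incremental update is finding the data that has changed since the last update. Most databases can capture such changes. The common ways are incremental backup and data replication. Handling incremental updates through database administration requires solid database administration skills. Most mature databases offer incremental backup and data replication, although the implementations differ. Because ETL incremental update only needs the data, not other database objects, and needs neither a full backup nor a full standby database, the implementation is still fairly simple. Create a table with a structure similar to the source table, then create three triggers, one each for insert, update, and delete, and maintain this new table. When running the ETL process, stop the incremental backup or data replication, read the new table, and delete its contents once it has been read. This approach is not easy to run on a schedule and requires some database-specific knowledge. If you need data close to real time, implement a data replication scheme; if the requirement is looser, incremental backup is simpler.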

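Points to note:

1. Triggers

With either incremental backup or data replication, if the source table has triggers, do not keep them on the backup database. What we need is not a backup database, only its data. It is best to leave out all unneeded database objects and small tables.

2. Logical consistency and physical consistency

Database backup and synchronization distinguish between logical and physical consistency. Put simply, the same query returns the same overall data on the backup database and on the primary, but the order of the rows may differ. This can happen to any query without an explicit sort (including group by, distinct, and union). It can affect how primary keys are generated, so take it into account when designing key generation, for example by adding an explicit order by. That avoids primary key problems if the data has to be re-read after an error.

Summary

Incremental update is a common ETL task, and different environments call for different strategies. This article cannot cover every scenario, such as multiple data sources feeding one target database, id generation strategies, or business keys that do not match surrogate keys. It only aims to offer some ideas for the more common cases, and I hope it is helpful.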

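Related articles:

Open Source ETL Tool Kettle Series: Building Slowly Changing Dimensions

http://tech.cms.it168.com/db/2008-03-21/200803211716994.shtml

Open Source ETL Tool Kettle Series: Dynamic Transformations

http://tech.cms.it168.com/o/2008-03-17/200803171550713.shtml

Open Source ETL Tool Kettle Series: Integrating Kettle in Applications

http://tech.it168.com/db/2008-03-19/200803191510476.shtml

Open Source ETL Tool Kettle Series: Frequently Asked Questions

http://tech.it168.com/db/2008-03-19/200803191501671.shtml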

Web services performance tuning - jboss web services - JBoss application server tutorials

web service performance tip #1

Use coarse grained web services

One of the biggest mistakes developers make when approaching web services is defining operations and messages that are too fine-grained. In doing so they usually define more than they really need. Make sure your web service is coarse-grained and that its messages are business-oriented rather than programmer-oriented.

This is why top-down web services are considered the purest kind: you define the business contract first and the programming interface second.

Don't define a web service operation for every Java method you want to expose. Instead, define one operation for each business action you need to expose.

And this is a coarse-grained web service:

For the sake of simplicity we have not added any transaction commit/rollback. The point to stress is that the first kind of web service is bad design practice to begin with, and it will also perform poorly because it needs 3 round trips to the server, each carrying SOAP packets.
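The contrast can be sketched in plain Java. This is a hypothetical illustration, not a reconstruction of the article's listings: the `AccountService` class, its operations, and the `remoteCalls` counter (which stands in for SOAP round trips) are all assumptions, and no JAX-WS annotations are shown.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical service exposing both fine-grained and coarse-grained operations. */
final class AccountService {
    private final Map<String, Long> balances = new HashMap<>();
    int remoteCalls; // counts simulated round trips to the server

    /** Local setup helper, not counted as a remote call. */
    void open(String id, long initial) {
        balances.put(id, initial);
    }

    // Fine-grained operations: a client needs three of these to move money.
    long getBalance(String id) {
        remoteCalls++;
        return balances.get(id);
    }

    void withdraw(String id, long amount) {
        remoteCalls++;
        balances.merge(id, -amount, Long::sum);
    }

    void deposit(String id, long amount) {
        remoteCalls++;
        balances.merge(id, amount, Long::sum);
    }

    // Coarse-grained operation: one business action, one round trip.
    // Assumes both accounts exist; a real service would validate its input.
    boolean transfer(String from, String to, long amount) {
        remoteCalls++;
        if (balances.get(from) < amount) {
            return false;
        }
        balances.merge(from, -amount, Long::sum);
        balances.merge(to, amount, Long::sum);
        return true;
    }
}
```

A client using the fine-grained operations pays for three messages per transfer and must handle partial failure itself, while `transfer` keeps the whole business action on the server.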

web service performance tip #2

Use primitive types, String or simple POJOs as parameters and return types

Web services were designed from the ground up to be interoperable components, so you can return both primitive types and objects. How can a C# client retrieve a Java type returned by a web service, or vice versa? It is possible because the objects moving to and from web services are flattened into XML.

As you can imagine, the size of the object used as a parameter or return type has a huge impact on performance. Be careful with objects that contain large collections as fields; they are usually responsible for poor web service performance. If you have collections or fields that are not useful in your response, mark them as transient.

web service performance tip #3

Evaluate carefully how much data you need to return

Consider returning only subsets of data if the database queries are fast but the object graph is fairly complex. Example:

On the other hand, if your bottleneck is database connectivity, reduce the number of trips to the service and try to return as much data as possible per call.

web service performance tip #4

Examine the SOAP packet

This tip is the logical continuation of the previous one: if you are dealing with complex object types, always check what is actually moving across the network. There are many ways to sniff a SOAP packet, such as TCP proxy software that analyzes the packets between two endpoints. For example, Apache provides a simple TCP monitoring utility that can be used for this purpose:

http://ws.apache.org/commons/tcpmon/

Here's how to debug SOAP messages with JBoss:

http://www.mastertheboss.com/en/jboss-howto/49-jboss-http/178-how-to-monitor-webservices-soap-messages-.html

web service performance tip #5

Cache on the client when possible

If your client application requests the same information from the server repeatedly, you will eventually find that the server's performance, and consequently your application's response time, is not fast enough.

Depending on the frequency of the messaging and the type of data, it may be necessary to cache data on the client.

This approach has to address one important issue: how do we know that the cached data is still consistent? The technical solution is to invert the flow: when the data in the RDBMS changes and the cache becomes stale, a notification is issued so that the client cache is refreshed. The following article describes an elegant approach to this topic.

http://www.javaworld.com/javaworld/jw-03-2002/jw-0308-soap.html?page=2

web service performance tip #6

Evaluate an asynchronous messaging model

In some situations, responses to web service requests are not provided immediately, but some time after the initial request transaction completes. This may be because the transport is slow or unreliable, or because the processing is complex or long-running. In this situation you should consider an asynchronous messaging model.

The newer web service framework, JAX-WS, supports two models for asynchronous request-response messaging: polling and callback. Polling lets client code repeatedly check a response object to determine whether a response has been received. The callback approach instead defines a handler that processes the response asynchronously when it becomes available.

Asynchronous web methods are particularly useful if you perform I/O-bound operations such as:

- Accessing streams

- File I/O operations

- Calling another web service

In this article I showed how to build asynchronous web services using JAX-WS 2.0:

http://www.mastertheboss.com/en/web-interfaces/111-asynchronous-web-services-with-jboss-ws.html

web service performance tip #7

Use JAX-WS web services

Web services based on JAX-WS perform much better than the earlier JAX-RPC web services.

This is because JAX-WS uses JAXB for data type marshalling and unmarshalling, which has a significant performance advantage over DOM-based parsers.

For a detailed benchmark comparison, see:

http://java.sun.com/developer/technicalArticles/WebServices/high_performance/

web service performance tip #8

Do you need RESTful web services?

RESTful web services follow a stateless client-server architecture in which web services are viewed as resources and identified by their URLs.

REST does not define any form of message envelope, while SOAP does. So, to begin with, you avoid the overhead of headers and the additional layers of SOAP elements around the XML payload. That is not a big gain, although on limited-profile devices such as PDAs and mobile phones it can make a difference.

The real performance difference, however, lies not in wire speed but in cacheability. REST suggests using the web's own semantics instead of tunneling over it via XML, so RESTful web services are generally designed to use cache headers correctly and therefore work well with the web's standard infrastructure, such as caching proxies and even local browser caches.

RESTful web services are not a silver bullet. They are advisable in the following kinds of scenarios:

REST web services are a good choice when your web services are completely stateless. Since there is no formal way to describe the web service interface, the service producer and the service consumer need a mutual understanding of the context and content being passed along.

On the other hand, a SOAP-based design may be appropriate when a formal contract must be established to describe the interface the web service offers. The Web Services Description Language (WSDL) describes details such as the messages, operations, bindings, and location of the web service. Another scenario in which SOAP-based web services are mandatory is an architecture that needs to handle asynchronous processing and invocation.

web service performance tip #9

Eager initialization

JBossWS may perform differently on the first method invocation of each service than on subsequent ones when huge WSDL contracts (with hundreds of imported XML schemas) are referenced. This is due to a number of operations performed internally during the first invocation, whose results are then cached and reused. While this is usually not a problem, you may want nearly the same performance on every invocation. You can achieve this by setting the org.jboss.ws.eagerInitializeJAXBContextCache system property to true, both on the server side (in the JBoss start script) and on the client side (a convenient constant is available in org.jboss.ws.Constants). JAXBContext creation usually accounts for most of the time the stack needs during the first invocation; this feature makes JBossWS try to create and cache the JAXB contexts eagerly, before the first invocation is handled.

web service performance tip #10

Use literal message encoding for parameter formatting

Encoded formatting of parameters produces larger messages than literal message encoding (literal is the default). In general, use the literal format unless you are forced to switch to SOAP encoding for interoperability with a web services platform that does not support literal. Here's an example of an RPC/encoded SOAP message for helloWorld:

Here the operation name appears in the message, so the receiver can easily dispatch the message to the implementation of the operation. The type encoding information (such as xsi:type="xsd:int") is usually just overhead that degrades throughput. And this is the equivalent Document/literal wrapped SOAP message:

Analyzing Thread Dumps in Middleware - Part 2

This posting is the second section in the series Analyzing Thread Dumps in Middleware.

This section deals with when and how to capture and analyze thread dumps, with special focus on thread dumps from WebLogic Application Server. Subsequent sections will deal with more real-world samples and with tools for automatic analysis of thread dumps.

The Diagnosis

Everyone has gone through periodic health checkups. As a starting point, doctors always order a blood test. Why this emphasis on the blood test? Can't they just go by the patient's feedback or concerns? What is special about a blood test?

A blood test helps by:

- Capturing a snapshot of the overall health of the body (cells, antibodies, and so on)

- Detecting abnormalities (low or high hormone levels, elevated blood sugar)

- Identifying the problem areas so that prevention and treatment can focus on them

The thread dump is the equivalent of a blood test for a JVM. It is a state dump of the running JVM and all its parts that are executing at that moment in time.

- It can help identify current execution patterns and focus attention on the relevant parts

- There may be hundreds of components and layers, which makes it hard to identify what is invoked most and where to focus attention. A thread dump taken under load highlights the most executed code paths.

- It reveals dependencies and bottlenecks in code and application design

- It provides pointers for enhancements and optimizations

When to capture Thread Dumps

Under what conditions should we capture thread dumps? Anytime, or at specific times? Capturing thread dumps is ideal in the following situations:

- To understand what is actively executing in the system under load

- When the system is sluggish, slow, or hangs

- When the JVM process is running but the server or application itself is not responding

- When pages take forever to load

- When response time increases

- When application or server behavior is erratic

- Just to observe hot spots (frequently executed code) under load

- For performance tuning

- For dependency analysis

- For bottleneck identification and resolution

- To capture a snapshot of the running state of the server

The cost of capturing thread dumps is close to zero. Java profilers and other tools can add anywhere from 5 to 20% overhead, whereas a thread dump is a snapshot of threads that requires no heavyweight processing on the part of the JVM. There is a cost only if the JVM is interrupted too many times within very short intervals (such as taking dumps continuously every second or so).

How to capture Thread Dumps

There are various options to capture thread dumps. JVM vendor tools allow capture and gather of thread dumps. Operating System interrupt signals to the process can also be used in generating thread dumps.

Sun HotSpot's jstack tool (under the bin directory of JAVA_HOME or the JRE home) can generate thread dumps given the JVM process id. Similarly, jrcmd (from JRockit Mission Control) can generate thread dumps given a JVM process id and the print_threads command. Both must be run on the same machine as the JVM process, and both write the output to the user's shell.

In Unix, kill -3 <PID> or kill -SIGQUIT <PID> will generate a thread dump of the JVM process. The output goes to STDERR, however, so unless STDERR was redirected to a log file, a thread dump issued via kill signals cannot be saved. Ensure the process is always started with STDERR redirected to a log file (it is best practice to redirect STDOUT to the same log file as well).

In Windows, sending Ctrl-Break to a JVM process running in the foreground generates a thread dump. The primary limitation is that the process needs to be running in a shell. Process Explorer in Windows can also help in generating thread dumps, but it is much more cumbersome to get all the thread stacks in one shot; one has to wade through each thread and get its stack. Another thing to keep in mind is that a JVM might ignore these signals if it was started with the -Xrs flag.

WebLogic Application Server allows capture of thread dumps via additional options:

- WLS Admin Console can generate thread dumps. Go to the Server Instance -> Monitoring -> Threads -> Dump Threads option.

- Using weblogic's WLST (WebLogic Scripting Tool) to issue Thread dumps.

- weblogic.Admin THREAD_DUMP command option

But generating thread dumps via WebLogic-specific commands is not recommended, as the JVM itself might be sluggish or hung and never respond to higher application-level commands (including WebLogic's). Issuing thread dumps via the WLS Admin Console requires the Admin server to be up and healthy first, and also in communication with the target server.

Sometimes even the OS-level or JVM vendor-specific tools might not be able to generate thread dumps. The causes can include memory thrashing (Out Of Memory - OOM) and thread deaths in the JVM, the system itself being under tight constraints (memory/CPU), or the process being unhealthy and unable to respond to anything in a predictable way.
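Besides the external tools described above, a JVM can also report on its own threads through the standard java.lang.management API. The following is only a minimal sketch of that idea, not something the tools above rely on; the class name InProcessThreadDump is made up for illustration, and like any in-process approach it will not help if the JVM is too unhealthy to run the code.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper class, named for illustration only.
class InProcessThreadDump {

    /** Returns a textual dump of all live threads in the current JVM. */
    static String dump() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        // Request monitor / synchronizer details only where the JVM supports them.
        ThreadInfo[] infos = mx.dumpAllThreads(
                mx.isObjectMonitorUsageSupported(),
                mx.isSynchronizerUsageSupported());
        StringBuilder out = new StringBuilder();
        for (ThreadInfo info : infos) {
            out.append('"').append(info.getThreadName()).append("\" id=")
               .append(info.getThreadId()).append(' ')
               .append(info.getThreadState()).append('\n');
            // Print every frame; ThreadInfo.toString() would truncate deep stacks.
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("    at ").append(frame).append('\n');
            }
            out.append('\n');
        }
        return out.toString();
    }

    public static void main(String[] args) {
        System.out.print(dump());
    }
}
```

The output format here is ad hoc and does not match the jstack or JRockit layouts shown in this article.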

What to Observe

Now that we can capture thread dumps, what should we observe? It is always recommended to take multiple thread dumps at close intervals (5 or 6 dumps at 10-15 second intervals). Why? A thread dump is a snapshot of threads in execution or in various states. Taking multiple thread dumps allows us to peek into the threads as they continue execution.
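As a rough illustration of taking several dumps at a fixed interval, here is a sketch that does it from inside the JVM with the standard ThreadMXBean API. The class and method names (PeriodicDumps, capture) are hypothetical, and in practice the same effect is usually achieved by issuing kill -3 or jstack repeatedly from a script.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper class, named for illustration only.
class PeriodicDumps {

    /** Takes count snapshots of all threads, pausing intervalMillis between them. */
    static List<ThreadInfo[]> capture(int count, long intervalMillis) {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        List<ThreadInfo[]> dumps = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            dumps.add(mx.dumpAllThreads(false, false));
            if (i < count - 1) {
                try {
                    Thread.sleep(intervalMillis);
                } catch (InterruptedException e) {
                    // Preserve the interrupt and stop early with what we have.
                    Thread.currentThread().interrupt();
                    break;
                }
            }
        }
        return dumps;
    }

    public static void main(String[] args) {
        // Short demo values; the text suggests 5 or 6 dumps, 10-15 seconds apart.
        List<ThreadInfo[]> dumps = capture(3, 1_000);
        System.out.println("captured " + dumps.size() + " dumps");
    }
}
```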

Compare threads by thread id or name across successive thread dumps to check for change in execution. A change in the thread state and/or stack content for a given thread across successive dumps implies there is progress, while no change indicates either a warning condition or something benign.

So, what if a given thread is not showing progress between thread dumps? It is possible the thread is doing nothing and so shows no change between dumps. Or it may be waiting for an event to happen and so remains in the same state with the same stack. These cases might be benign. Or it might be blocked for a lock and continue to languish in the same state because the owner of the lock is still holding it, or because someone else got the lock ahead of our target thread. It is good to figure out which of these conditions applies and rule out the benign cases.
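The comparison across dumps can be sketched in a few lines. The example below assumes each dump has already been parsed into a map from thread name to its stack frames (top frame first); that data model and the names ProgressCheck and threadsWithoutProgress are assumptions made for illustration. It compares stack content only, not thread state.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, named for illustration only.
class ProgressCheck {

    /**
     * Returns the names of threads that appear in every dump with an identical stack.
     * Each dump maps a thread name to its stack frames, top frame first.
     */
    static List<String> threadsWithoutProgress(List<Map<String, List<String>>> dumps) {
        List<String> unchanged = new ArrayList<>();
        if (dumps.size() < 2) {
            return unchanged; // a single snapshot cannot show progress or the lack of it
        }
        Map<String, List<String>> first = dumps.get(0);
        for (Map.Entry<String, List<String>> entry : first.entrySet()) {
            boolean same = true;
            for (Map<String, List<String>> later : dumps.subList(1, dumps.size())) {
                // A thread missing from a later dump counts as changed.
                if (!entry.getValue().equals(later.get(entry.getKey()))) {
                    same = false;
                    break;
                }
            }
            if (same) {
                unchanged.add(entry.getKey());
            }
        }
        return unchanged;
    }
}
```

The threads returned are only candidates: as noted above, each one still has to be checked to see whether it is idle, waiting on an event, or blocked on a lock.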

Identifying Idle or Benign threads

One can use the following rules of thumb while analyzing application server thread dumps, along with some additional pointers for WebLogic-specific threads.

- If the thread stack depth is less than 5 or 6, treat it as an idle or benign thread. Why? By default, an application server thread itself takes up 2-3 levels of stack depth (like someRunnable or Executor.run), and if there is not much stack depth remaining, it is most likely sitting there waiting for some job or condition.

"schedulerFactoryBean_Worker-48" id=105 idx=0x1e4 tid=7392 prio=5 alive, waiting, native_blocked

-- Waiting for notification on: org/quartz/simpl/SimpleThreadPool$WorkerThread@0xd5ddc168[fat lock]

at jrockit/vm/Threads.waitForNotifySignal(JLjava/lang/Object;)Z(Native Method)

at java/lang/Object.wait(J)V(Native Method)

at org/quartz/simpl/SimpleThreadPool$WorkerThread.run(SimpleThreadPool.java:519)

^-- Lock released while waiting: org/quartz/simpl/SimpleThreadPool$WorkerThread@0xd5ddc168[fat lock]

at jrockit/vm/RNI.c2java(JJJJJ)V(Native Method)

-- end of trace

"Agent ServerConnection" id=17 idx=0xa0 tid=7154 prio=5 alive, sleeping, native_waiting, daemon

at java/lang/Thread.sleep(J)V(Native Method)

at com/wily/introscope/agent/connection/ConnectionThread.sendData(ConnectionThread.java:312)

at com/wily/introscope/agent/connection/ConnectionThread.run(ConnectionThread.java:65)

at java/lang/Thread.run(Thread.java:662)

at jrockit/vm/RNI.c2java(JJJJJ)V(Native Method)

-- end of trace

"DynamicListenThread[Default]" id=119 idx=0x218 tid=7465 prio=9 alive, in native, daemon

at sun/nio/ch/ServerSocketChannelImpl.accept0(Ljava/io/FileDescriptor;Ljava/io/FileDescriptor;[Ljava/net/InetSocketAddress;)I(Native Method)

at sun/nio/ch/ServerSocketChannelImpl.accept(ServerSocketChannelImpl.java:145)

^-- Holding lock: java/lang/Object@0xbdc08428[thin lock]

at weblogic/socket/WeblogicServerSocket.accept(WeblogicServerSocket.java:30)

at weblogic/server/channels/DynamicListenThread$SocketAccepter.accept(DynamicListenThread.java:535)

at weblogic/server/channels/DynamicListenThread$SocketAccepter.access$200(DynamicListenThread.java:417)

at weblogic/server/channels/DynamicListenThread.run(DynamicListenThread.java:173)

at java/lang/Thread.run(Thread.java:662)
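The stack depth rule of thumb is easy to apply mechanically. The following sketch filters the live threads of the current JVM by stack depth; the class name IdleThreadFilter and the threshold parameter are illustrative assumptions, and the cutoff of 5 or 6 is only the heuristic from the text.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, named for illustration only.
class IdleThreadFilter {

    /** Rule of thumb from the text: very shallow stacks are probably idle. */
    static boolean isLikelyIdle(StackTraceElement[] stack, int maxIdleDepth) {
        return stack.length < maxIdleDepth;
    }

    /** Names of live threads in this JVM whose stack is at least maxIdleDepth deep. */
    static List<String> candidateBusyThreads(int maxIdleDepth) {
        List<String> busy = new ArrayList<>();
        for (Map.Entry<Thread, StackTraceElement[]> e : Thread.getAllStackTraces().entrySet()) {
            if (!isLikelyIdle(e.getValue(), maxIdleDepth)) {
                busy.add(e.getKey().getName());
            }
        }
        return busy;
    }

    public static void main(String[] args) {
        System.out.println(candidateBusyThreads(6));
    }
}
```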

If it is a WebLogic-specific thread dump:

- Ignore those threads in ExecuteThread.waitForRequest() method call.

"[ACTIVE] ExecuteThread: '3' for queue: 'weblogic.kernel.Default (self-tuning)'" id=71 idx=0x15c tid=10158 prio=9 alive, waiting, native_blocked, daemon

-- Waiting for notification on: weblogic/work/ExecuteThread@0x1755df6d8[fat lock]

at jrockit/vm/Threads.waitForNotifySignal(JLjava/lang/Object;)Z(Native Method)

at jrockit/vm/Locks.wait(Locks.java:1973)[inlined]

at java/lang/Object.wait(Object.java:485)[inlined]

at weblogic/work/ExecuteThread.waitForRequest(ExecuteThread.java:160)[optimized]

^-- Lock released while waiting: weblogic/work/ExecuteThread@0x1755df6d8[fat lock]

at weblogic/work/ExecuteThread.run(ExecuteThread.java:181)

at jrockit/vm/RNI.c2java(JJJJJ)V(Native Method)

-- end of trace

- For Muxer threads, ensure that a blocked Muxer thread is waiting for a lock held by another Muxer thread and not by a non-Muxer thread.

"ExecuteThread: '4' for queue: 'weblogic.socket.Muxer'" id=31 idx=0xc8 tid=8777 prio=5 alive, blocked, native_blocked, daemon

-- Blocked trying to get lock: java/lang/String@0x17674d0c8[fat lock]

at jrockit/vm/Threads.waitForUnblockSignal()V(Native Method)

at jrockit/vm/Locks.fatLockBlockOrSpin(Locks.java:1411)[optimized]

at jrockit/vm/Locks.lockFat(Locks.java:1512)[optimized]

at jrockit/vm/Locks.monitorEnterSecondStageHard(Locks.java:1054)[optimized]

at jrockit/vm/Locks.monitorEnterSecondStage(Locks.java:1005)[optimized]

at jrockit/vm/Locks.monitorEnter(Locks.java:2179)[optimized]

at weblogic/socket/EPollSocketMuxer.processSockets(EPollSocketMuxer.java:153)

at weblogic/socket/SocketReaderRequest.run(SocketReaderRequest.java:29)

at weblogic/socket/SocketReaderRequest.execute(SocketReaderRequest.java:42)

at weblogic/kernel/ExecuteThread.execute(ExecuteThread.java:145)

at weblogic/kernel/ExecuteThread.run(ExecuteThread.java:117)

at jrockit/vm/RNI.c2java(JJJJJ)V(Native Method)

-- end of trace

"ExecuteThread: '5' for queue: 'weblogic.socket.Muxer'" id=32 idx=0xcc tid=8778 prio=5 alive, native_blocked, daemon

at jrockit/ext/epoll/EPoll.epollWait0(ILjava/nio/ByteBuffer;II)I(Native Method)

at jrockit/ext/epoll/EPoll.epollWait(EPoll.java:115)[optimized]

at weblogic/socket/EPollSocketMuxer.processSockets(EPollSocketMuxer.java:156)

^-- Holding lock: java/lang/String@0x17674d0c8[fat lock]

at weblogic/socket/SocketReaderRequest.run(SocketReaderRequest.java:29)

at weblogic/socket/SocketReaderRequest.execute(SocketReaderRequest.java:42)

at weblogic/kernel/ExecuteThread.execute(ExecuteThread.java:145)

at weblogic/kernel/ExecuteThread.run(ExecuteThread.java:117)

at jrockit/vm/RNI.c2java(JJJJJ)V(Native Method)

-- end of trace

WebLogic Specific Thread Tagging

WebLogic tags threads internally as Active and Standby based on its internal work manager and thread pooling implementation. There is another level of tagging based on whether a thread gets returned to the thread pool within a defined time period. If the thread is not returned within a specified duration, it is tagged as Hogging, and if it exceeds an even higher limit (default of 10 minutes), it is tagged as STUCK. This means the thread is involved in a very long activity that requires some manual inspection.

- It is possible for pollers to get kicked off but never return, as they just keep polling in an infinite loop. Examples are the AQ Adapter Poller threads, which might wrongly get tagged as STUCK. These are benign in nature.

- A thread is doing a database read or remote invocation as part of some user request and is tagged STUCK. This implies the thread has not completed the request for more than 10 minutes and is most likely hung. It is possibly doing a socket read on an endpoint that is bad or non-responsive, or doing a very large remote or local execution that should not be synchronous to begin with. Or it might be blocked for a resource that is part of a deadlocked chain, and that might be the reason for the lack of progress.
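The time-based tagging described above can be pictured as a simple threshold check. This is only an illustrative sketch and not WebLogic's actual implementation; the class, enum and parameter names are invented, and the Hogging threshold is left as a parameter because the text only states the 10 minute default for STUCK.

```java
import java.time.Duration;

// Hypothetical sketch; not WebLogic code.
class ThreadTagSketch {

    enum Tag { NORMAL, HOGGING, STUCK }

    /**
     * Classifies a thread by how long it has been busy with a single request.
     * Both thresholds are caller-supplied assumptions.
     */
    static Tag classify(Duration busyFor, Duration hoggingAfter, Duration stuckAfter) {
        if (busyFor.compareTo(stuckAfter) > 0) {
            return Tag.STUCK;
        }
        if (busyFor.compareTo(hoggingAfter) > 0) {
            return Tag.HOGGING;
        }
        return Tag.NORMAL;
    }
}
```

A check like this cannot tell a benign infinite poller from a hung request, which is why STUCK threads still need manual inspection.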

Analyzing a Thread Stack

The Java thread stack content in a thread dump is always in text/ASCII format, enabling a quick read without any tools. Almost invariably, the thread stack is in English, which lets the user read the package, class name and method call. These three aspects give a general overview of the call the thread is executing, who invoked it and what it is invoking next, thereby establishing the caller-callee chain. The method name gives a general idea of what the invocation is about. All of these data points can show what is being executed by the thread even if we have not written the code or do not fully understand the implementation.

"[ExecuteThread: '4' for queue: 'weblogic.kernel.Default (self-tuning)'" waiting for lock weblogic.rjvm.ResponseImpl@1b1d86 WAITING

java.lang.Object.wait(Native Method)

weblogic.rjvm.ResponseImpl.waitForData(ResponseImpl.java:84)

weblogic.rjvm.ResponseImpl.getTxContext(ResponseImpl.java:115)

weblogic.rjvm.BasicOutboundRequest.sendReceive(BasicOutboundRequest.java:109)

weblogic.rmi.internal.BasicRemoteRef.invoke(BasicRemoteRef.java:223)

javax.management.remote.rmi.RMIConnectionImpl_1001_WLStub.getAttribute(Unknown Source)

weblogic.management.remote.common.RMIConnectionWrapper$11.run(ClientProviderBase.java:531)

……

weblogic.management.remote.common.RMIConnectionWrapper.getAttribute(ClientProviderBase.java:529)

javax.management.remote.rmi.RMIConnector$RemoteMBeanServerConnection.getAttribute(RMIConnector.java:857)

weblogic.management.mbeanservers.domainruntime.internal.ManagedMBeanServerConnection.getAttribute(ManagedMBeanServerConnection.java:281)

weblogic.management.mbeanservers.domainruntime.internal.FederatedMBeanServerInterceptor.getAttribute(FederatedMBeanServerInterceptor.java:227)

weblogic.management.jmx.mbeanserver.WLSMBeanServerInterceptorBase.getAttribute(WLSMBeanServerInterceptorBase.java:116)

weblogic.management.jmx.mbeanserver.WLSMBeanServerInterceptorBase.getAttribute(WLSMBeanServerInterceptorBase.java:116)

….

weblogic.management.jmx.mbeanserver.WLSMBeanServer.getAttribute(WLSMBeanServer.java:269)

javax.management.remote.rmi.RMIConnectionImpl.doOperation(RMIConnectionImpl.java:1387)

javax.management.remote.rmi.RMIConnectionImpl.access$100(RMIConnectionImpl.java:81)

……

weblogic.rmi.internal.ServerRequest.sendReceive(ServerRequest.java:174)

weblogic.rmi.internal.BasicRemoteRef.invoke(BasicRemoteRef.java:223)

javax.management.remote.rmi.RMIConnectionImpl_1001_WLStub.getAttribute(Unknown Source)

javax.management.remote.rmi.RMIConnector$RemoteMBeanServerConnection.getAttribute(RMIConnector.java:857)

javax.management.MBeanServerInvocationHandler.invoke(MBeanServerInvocationHandler.java:175)

weblogic.management.jmx.MBeanServerInvocationHandler.doInvoke(MBeanServerInvocationHandler.java:504)

weblogic.management.jmx.MBeanServerInvocationHandler.invoke(MBeanServerInvocationHandler.java:380)

$Proxy61.getName(Unknown Source)

com.bea.console.utils.DeploymentUtils.getAggregatedState(DeploymentUtils.java:507)

com.bea.console.utils.DeploymentUtils.getApplicationStatusString(DeploymentUtils.java:2042)

com.bea.console.actions.app.DeploymentsControlTableAction.getCollection(DeploymentsControlTableAction.java:181)

In the above stack trace, we can see the thread belongs to the "weblogic.kernel.Default (self-tuning)" group, has the thread name "ExecuteThread: '4'" and is waiting for a notification on a lock. Some WLS-specific observations based on the stack:

- WLS Admin Console Application attempts to get ApplicationStatus of the Deployments on the remote server.

- MBeanServer.getAttribute() implies it was a call to get some attributes (in this case, the application status of deployments) from the MBean Server of a remote WLS server instance.

- weblogic.rjvm.ResponseImpl.waitForData() signifies the thread is waiting for a weblogic RMI response from a remote WLS server for the remote MBean Server query call.

"ACTIVE ExecuteThread: '0' for queue: 'weblogic.kernel.Default (self-tuning)'" daemon prio=10 tid=0x00002aabc9820800 nid=0x7a7d runnable 0x000000004290d000

java.lang.Thread.State: RUNNABLE

at java.io.FileInputStream.readBytes(Native Method)

at java.io.FileInputStream.read(FileInputStream.java:199)

at sun.security.provider.NativePRNG$RandomIO.readFully(NativePRNG.java:185)

at sun.security.provider.NativePRNG$RandomIO.implGenerateSeed(NativePRNG.java:202)

- locked <0x00002aab7b441ee0> (a java.lang.Object)

at sun.security.provider.NativePRNG$RandomIO.access$300(NativePRNG.java:108)

at sun.security.provider.NativePRNG.engineGenerateSeed(NativePRNG.java:102)

at java.security.SecureRandom.generateSeed(SecureRandom.java:495)

at com.bea.security.utils.random.AbstractRandomData.ensureInittedAndSeeded(AbstractRandomData.java:83)

- locked <0x00002aab6da12720> (a com.bea.security.utils.random.SecureRandomData)

at com.bea.security.utils.random.AbstractRandomData.getRandomBytes(AbstractRandomData.java:97)

- locked <0x00002aab6da12720> (a com.bea.security.utils.random.SecureRandomData)

at com.bea.security.utils.random.AbstractRandomData.getRandomBytes(AbstractRandomData.java:92)

at weblogic.management.servlet.ConnectionSigner.signConnection(ConnectionSigner.java:132)

- locked <0x00002aaab0611688> (a java.lang.Class for weblogic.management.servlet.ConnectionSigner)

at weblogic.ldap.EmbeddedLDAP.getInitialReplicaFromAdminServer(EmbeddedLDAP.java:1268)

at weblogic.ldap.EmbeddedLDAP.start(EmbeddedLDAP.java:221)

at weblogic.t3.srvr.SubsystemRequest.run(SubsystemRequest.java:64)

at weblogic.work.ExecuteThread.execute(ExecuteThread.java:201)

at weblogic.work.ExecuteThread.run(ExecuteThread.java:173)

In the above thread stack, WebLogic's embedded LDAP is just starting up and is attempting to generate a random seed using the entropy of the file system. The thread can stay in this state if there is not much activity in the file system, and the server might appear hung at startup. A solution on Linux is to add -Djava.security.egd=file:/dev/./urandom to the Java command-line arguments to choose the urandom entropy scheme for random number generation.

Note: this is not advisable for production systems, as the random numbers generated this way are not truly random.

"[ACTIVE] ExecuteThread: '32' for queue: 'weblogic.kernel.Default (self-tuning)'" daemon prio=10 tid=0x0000002c5ede5000 nid=0x2ca6 waiting for monitor entry [0x000000006a6cc000]

java.lang.Thread.State: BLOCKED (on object monitor)

at weblogic.messaging.kernel.internal.QueueImpl.addReader(QueueImpl.java:1082)

- waiting to lock <0x0000002ac75d1b80> (a weblogic.messaging.kernel.internal.QueueImpl)

at weblogic.messaging.kernel.internal.ReceiveRequestImpl.start(ReceiveRequestImpl.java:178)

at weblogic.messaging.kernel.internal.ReceiveRequestImpl.<init>(ReceiveRequestImpl.java:86)

at weblogic.messaging.kernel.internal.QueueImpl.receive(QueueImpl.java:841)

at weblogic.jms.backend.BEConsumerImpl.blockingReceiveStart(BEConsumerImpl.java:1308)

at weblogic.jms.backend.BEConsumerImpl.receive(BEConsumerImpl.java:1514)

at weblogic.jms.backend.BEConsumerImpl.invoke(BEConsumerImpl.java:1224)

at weblogic.messaging.dispatcher.Request.wrappedFiniteStateMachine(Request.java:961)

at weblogic.messaging.dispatcher.DispatcherServerRef.invoke(DispatcherServerRef.java:276)

at weblogic.messaging.dispatcher.DispatcherServerRef.handleRequest(DispatcherServerRef.java:141)

at weblogic.messaging.dispatcher.DispatcherServerRef.access$000(DispatcherServerRef.java:34)

at weblogic.messaging.dispatcher.DispatcherServerRef$2.run(DispatcherServerRef.java:111)

at weblogic.work.ExecuteThread.execute(ExecuteThread.java:201)

at weblogic.work.ExecuteThread.run(ExecuteThread.java:173)

This thread stack shows WLS adding a JMS receiver to a queue; the thread is blocked waiting to lock the QueueImpl instance.

The following thread stack shows an Oracle Service Bus (OSB) HTTP proxy service blocked waiting for the response from an outbound web service callout. It was tagged as STUCK because there was no progress for more than 10 minutes.

"[STUCK] ExecuteThread: '53' for queue: 'weblogic.kernel.Default (self-tuning)'" <alive, in native, suspended, waiting, priority=1, DAEMON>

-- Waiting for notification on: java.lang.Object@7ec6c98[fat lock]

java.lang.Object.wait(Object.java:485)

com.bea.wli.sb.pipeline.PipelineContextImpl$SynchronousListener.waitForResponse()

com.bea.wli.sb.pipeline.PipelineContextImpl.dispatchSync()

stages.transform.runtime.WsCalloutRuntimeStep$WsCalloutDispatcher.dispatch()

stages.transform.runtime.WsCalloutRuntimeStep.processMessage()

com.bea.wli.sb.pipeline.StatisticUpdaterRuntimeStep.processMessage()

com.bea.wli.sb.stages.StageMetadataImpl$WrapperRuntimeStep.processMessage()

com.bea.wli.sb.stages.impl.SequenceRuntimeStep.processMessage()

com.bea.wli.sb.pipeline.PipelineStage.processMessage()

com.bea.wli.sb.pipeline.PipelineContextImpl.execute()

com.bea.wli.sb.pipeline.Pipeline.processMessage()

com.bea.wli.sb.pipeline.PipelineContextImpl.execute()

com.bea.wli.sb.pipeline.PipelineNode.doRequest()

com.bea.wli.sb.pipeline.Node.processMessage()

com.bea.wli.sb.pipeline.PipelineContextImpl.execute()

com.bea.wli.sb.pipeline.Router.processMessage()

com.bea.wli.sb.pipeline.MessageProcessor.processRequest()

com.bea.wli.sb.pipeline.RouterManager$1.run()

com.bea.wli.sb.pipeline.RouterManager$1.run()

weblogic.security.acl.internal.AuthenticatedSubject.doAs()

weblogic.security.service.SecurityManager.runAs()

com.bea.wli.sb.security.WLSSecurityContextService.runAs()

com.bea.wli.sb.pipeline.RouterManager.processMessage()

com.bea.wli.sb.transports.TransportManagerImpl.receiveMessage()

com.bea.wli.sb.transports.http.HttpTransportServlet$RequestHelper$1.run()

Identify Hot Spots

What constitutes a hot spot? A hot spot is a set of method calls that is repeatedly invoked by busy running threads (not idle or dormant threads). It can be a simple, healthy pattern, such as multiple threads executing requests against the same set of servlets or the same application layer, or it can be a symptom of a bottleneck if multiple threads are blocked waiting for the same lock.

Attempt to capture thread dumps and identify hot spots only after the server has been subjected to a decent load, by which time most of the code has been initialized and executed a few times.
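One rough way to surface hot spots is to count how many busy threads contain each frame. The sketch below assumes the busy thread stacks have already been extracted from a dump as lists of frame strings; that input format and the names HotSpotCounter and frameCounts are assumptions made for illustration.

```java
import java.util.HashMap;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;

// Hypothetical helper class, named for illustration only.
class HotSpotCounter {

    /**
     * Counts how many of the given thread stacks contain each frame.
     * A frame is counted once per stack, even if it recurs through recursion.
     */
    static Map<String, Integer> frameCounts(List<List<String>> busyStacks) {
        Map<String, Integer> counts = new HashMap<>();
        for (List<String> stack : busyStacks) {
            for (String frame : new LinkedHashSet<>(stack)) {
                counts.merge(frame, 1, Integer::sum);
            }
        }
        return counts;
    }
}
```

Frames shared by many busy threads are the candidates to look at first. Generic frames such as the thread's run method will naturally score high and can be ignored.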

For normal hot spots, try to identify possible optimizations in the code execution.

It might be that the thread is doing repeated lookups of JNDI resources, or repeatedly recreating a resource such as a JMS producer/consumer or an InitialContext to a remote server.

Cache the JNDI resources, Context and producer/consumer objects. Pool the producers/consumers if possible and reuse the JMS connections. Avoid repeated lookups. Even something as simple as resolving an address with InetAddress.getAllByName() can benefit: cache the results instead of resolving repeatedly. In the same way, cache XML parsers or handlers to avoid repeated classloading of XML parsers/factories/handlers/implementations. While caching, take care to avoid memory leaks if the number of cached instances can grow continuously.
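A minimal sketch of such a cache follows. The loader function stands in for whatever expensive lookup is being avoided (for example a JNDI lookup); the class name LookupCache and the maxEntries cap are illustrative assumptions. The cap is a crude guard against unbounded growth and is only approximate under concurrent access.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical helper class, named for illustration only.
class LookupCache<V> {
    private final Map<String, V> cache = new ConcurrentHashMap<>();
    private final Function<String, V> loader;
    private final int maxEntries;

    LookupCache(Function<String, V> loader, int maxEntries) {
        this.loader = loader;
        this.maxEntries = maxEntries;
    }

    /** Returns the cached value for name, loading it at most once while there is room. */
    V get(String name) {
        V cached = cache.get(name);
        if (cached != null) {
            return cached;
        }
        if (cache.size() >= maxEntries) {
            // Cache is full: load without storing, so the map cannot keep growing.
            return loader.apply(name);
        }
        return cache.computeIfAbsent(name, loader);
    }

    int size() {
        return cache.size();
    }
}
```

A production cache would normally also need eviction and a way to invalidate stale entries, for instance when a remote server is restarted.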

Identify Bottlenecks

As Goldfinger says to James Bond in "Goldfinger": "Once is happenstance. Twice is coincidence. The third time it's enemy action." The same applies to threads blocked for the same lock. Start navigating the chain of locks, try to identify the dependencies between the threads and the nature of the locking, and try to resolve them as suggested in the Reducing Locks section in Part 1 of this series.

- Identify blocked threads and dependency between the locks and the threads

- Start analyzing dependencies and try to reduce locking

- Resolve the bottlenecks.

This entire exercise can be a bit like peeling onions, as one layer of bottleneck might be masking or temporarily resolving a different bottleneck. It requires repeated rounds of analyzing, fixing, testing and analyzing again.

Following the chain

Sometimes a thread blocking others from obtaining a lock might itself be on the blocked list for yet another lock. In those cases, one should navigate the chain to identify who and what is blocking it. If there are deadlocks (circular dependency of locks), the JVM might report the deadlock automatically. But it is best practice to navigate and understand the chains, as that will help in resolving and optimizing the behavior.

Let's take the following thread stack. ExecuteThread: '58' is waiting to obtain the lock on java/util/TreeSet@0x4bba82c0.

"[STUCK] ExecuteThread: '58' for queue: 'weblogic.kernel.Default (self-tuning)'" id=131807 idx=0x90 tid=927 prio=1 alive, blocked, native_blocked, daemon

-- Blocked trying to get lock: java/util/TreeSet@0x4bba82c0[thin lock]

at jrockit/vm/Locks.monitorEnter(Locks.java:2170)[optimized]

at weblogic/common/resourcepool/ResourcePoolImpl$Group.getCheckRecord(ResourcePoolImpl.java:2369)

at weblogic/common/resourcepool/ResourcePoolImpl$Group.checkHang(ResourcePoolImpl.java:2463)

at weblogic/common/resourcepool/ResourcePoolImpl$Group.access$100(ResourcePoolImpl.java:2210)

...........

at weblogic/common/resourcepool/ResourcePoolImpl.reserveResourceInternal(ResourcePoolImpl.java:450)

at weblogic/common/resourcepool/ResourcePoolImpl.reserveResource(ResourcePoolImpl.java:329)

at weblogic/jdbc/common/internal/ConnectionPool.reserve(ConnectionPool.java:417)

at weblogic/jdbc/common/internal/ConnectionPool.reserve(ConnectionPool.java:324)

at weblogic/jdbc/common/internal/MultiPool.searchLoadBalance(MultiPool.java:312)

at weblogic/jdbc/common/internal/MultiPool.findPool(MultiPool.java:180)

at weblogic/jdbc/common/internal/ConnectionPoolManager.reserve(ConnectionPoolManager.java:89)

Search for the matching lock id among the rest of the threads. The lock is held by ExecuteThread '26', which itself is blocked for oracle/jdbc/driver/T4CConnection@0xf71f6938.

...

"[STUCK] ExecuteThread: '26' for queue: 'weblogic.kernel.Default (self-tuning)'" id=1495 idx=0x354 tid=7858 prio=1 alive, blocked, native_blocked, daemon

-- Blocked trying to get lock: oracle/jdbc/driver/T4CConnection@0xf71f6938[thin lock]

at jrockit/vm/Locks.monitorEnter(Locks.java:2170)[optimized]

at oracle/jdbc/driver/OracleStatement.close(OracleStatement.java:1785)

at oracle/jdbc/driver/OracleStatementWrapper.close(OracleStatementWrapper.java:83)

at oracle/jdbc/driver/OraclePreparedStatementWrapper.close(OraclePreparedStatementWrapper.java:80)

at weblogic/jdbc/common/internal/ConnectionEnv.initializeTest(ConnectionEnv.java:940)

at weblogic/jdbc/common/internal/ConnectionEnv.destroyForFlush(ConnectionEnv.java:529)

^-- Holding lock: weblogic/jdbc/common/internal/ConnectionEnv@0xf71f6798[recursive]

at weblogic/jdbc/common/internal/ConnectionEnv.destroy(ConnectionEnv.java:507)

^-- Holding lock: weblogic/jdbc/common/internal/ConnectionEnv@0xf71f6798[biased lock]

at weblogic/common/resourcepool/ResourcePoolImpl.destroyResource(ResourcePoolImpl.java:1802)

at weblogic/common/resourcepool/ResourcePoolImpl.access$500(ResourcePoolImpl.java:41)

at weblogic/common/resourcepool/ResourcePoolImpl$Group.killAllConnectionsBeingTested(ResourcePoolImpl.java:2399)

^-- Holding lock: java/util/TreeSet@0x4bba82c0[thin lock]

at weblogic/common/resourcepool/ResourcePoolImpl$Group.destroyIdleResources(ResourcePoolImpl.java:2267)

^-- Holding lock: weblogic/jdbc/common/internal/GenericConnectionPool@0x5da625a8[thin lock]

at weblogic/common/resourcepool/ResourcePoolImpl$Group.checkHang(ResourcePoolImpl.java:2475)

at weblogic/common/resourcepool/ResourcePoolImpl$Group.access$100(ResourcePoolImpl.java:2210)

at weblogic/common/resourcepool/ResourcePoolImpl.checkResource(ResourcePoolImpl.java:1677)

at weblogic/common/resourcepool/ResourcePoolImpl.checkResource(ResourcePoolImpl.java:1662)

at weblogic/common/resourcepool/ResourcePoolImpl.makeResources(ResourcePoolImpl.java:1268)

at weblogic/common/resourcepool/ResourcePoolImpl.makeResources(ResourcePoolImpl.java:1166)

The oracle/jdbc/driver/T4CConnection@0xf71f6938 lock is held by yet another thread, MDSPollingThread-owsm, which is attempting to test the health of its JDBC connection (reserved from the datasource connection pool) by running a basic test against the database. Either the socket read from the database is slow, or every other thread kept getting the lock except ExecuteThread 58 and 26, as both appear STUCK in the same position for 10 minutes or longer. Having multiple thread dumps helps confirm whether the same threads are stuck in the same position or whether lock ownership changed and these threads were simply unlucky in acquiring the locks.

"MDSPollingThread-[owsm, jdbc/mds/owsm]" id=94 idx=0x150 tid=2993 prio=5 alive, in native, daemon

at jrockit/net/SocketNativeIO.readBytesPinned(Ljava/io/FileDescriptor;[BIII)I(Native Method)

at jrockit/net/SocketNativeIO.socketRead(SocketNativeIO.java:32)

at java/net/SocketInputStream.socketRead0(Ljava/io/FileDescriptor;[BIII)I(SocketInputStream.java)

at java/net/SocketInputStream.read(SocketInputStream.java:129)

at oracle/net/nt/MetricsEnabledInputStream.read(TcpNTAdapter.java:718)

at oracle/net/ns/Packet.receive(Packet.java:295)

at oracle/net/ns/DataPacket.receive(DataPacket.java:106)

at oracle/net/ns/NetInputStream.getNextPacket(NetInputStream.java:317)

at oracle/net/ns/NetInputStream.read(NetInputStream.java:104)

at oracle/jdbc/driver/T4CSocketInputStreamWrapper.readNextPacket(T4CSocketInputStreamWrapper.java:126)

at oracle/jdbc/driver/T4CSocketInputStreamWrapper.read(T4CSocketInputStreamWrapper.java:82)

at oracle/jdbc/driver/T4CMAREngine.unmarshalUB1(T4CMAREngine.java:1177)

at oracle/jdbc/driver/T4CTTIfun.receive(T4CTTIfun.java:312)

at oracle/jdbc/driver/T4CTTIfun.doRPC(T4CTTIfun.java:204)

at oracle/jdbc/driver/T4C8Oall.doOALL(T4C8Oall.java:540)

at oracle/jdbc/driver/T4CPreparedStatement.executeForRows(T4CPreparedStatement.java:1079)

at oracle/jdbc/driver/OracleStatement.doExecuteWithTimeout(OracleStatement.java:1419)

at oracle/jdbc/driver/OraclePreparedStatement.executeInternal(OraclePreparedStatement.java:3752)

at oracle/jdbc/driver/OraclePreparedStatement.execute(OraclePreparedStatement.java:3937)

^-- Holding lock: oracle/jdbc/driver/T4CConnection@0xf71f6938[thin lock]

at oracle/jdbc/driver/OraclePreparedStatementWrapper.execute(OraclePreparedStatementWrapper.java:1535)

at weblogic/jdbc/common/internal/ConnectionEnv.testInternal(ConnectionEnv.java:873)

at weblogic/jdbc/common/internal/ConnectionEnv.test(ConnectionEnv.java:541)

at weblogic/common/resourcepool/ResourcePoolImpl.testResource(ResourcePoolImpl.java:2198)

The underlying cause of the whole hang was a firewall dropping the socket connections between the server and the database, leading to hung socket reads.
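The chain navigation in this example can be sketched as a small function. It assumes the dump has already been reduced to two maps, thread to the lock it is blocked on and lock to the thread holding it; those maps and the names LockChain and follow are assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper class, named for illustration only.
class LockChain {

    /**
     * Follows blocked-on / held-by links starting at the given thread.
     * waitingFor maps a thread name to the lock it is blocked on;
     * heldBy maps a lock to the thread name that holds it.
     * The chain ends at a thread that is not blocked or whose lock has no known owner.
     * If a thread repeats (a deadlock cycle), it is appended once more and the walk stops.
     */
    static List<String> follow(String thread, Map<String, String> waitingFor, Map<String, String> heldBy) {
        List<String> chain = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        String current = thread;
        while (current != null && seen.add(current)) {
            chain.add(current);
            String lock = waitingFor.get(current);
            current = (lock == null) ? null : heldBy.get(lock);
        }
        if (current != null) {
            chain.add(current); // repeated thread marks the cycle
        }
        return chain;
    }

    public static void main(String[] args) {
        Map<String, String> waitingFor = Map.of(
                "ExecuteThread 58", "java/util/TreeSet@0x4bba82c0",
                "ExecuteThread 26", "oracle/jdbc/driver/T4CConnection@0xf71f6938");
        Map<String, String> heldBy = Map.of(
                "java/util/TreeSet@0x4bba82c0", "ExecuteThread 26",
                "oracle/jdbc/driver/T4CConnection@0xf71f6938", "MDSPollingThread-owsm");
        System.out.println(follow("ExecuteThread 58", waitingFor, heldBy));
    }
}
```

With the data from the stacks above, the walk starting at ExecuteThread 58 ends at MDSPollingThread-owsm, the thread doing the socket read.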

If the application uses Java concurrent locks (java.util.concurrent), it might be harder to identify who is holding a lock, as there can be multiple threads waiting for notification on the lock but no obvious holder. Sometimes the JVM itself reports the lock chain. If not, enable -XX:+PrintConcurrentLocks on the Java command line to get details on concurrent locks.
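Deadlocks involving either monitors or java.util.concurrent locks can also be queried from inside the JVM through the standard ThreadMXBean API. This is a minimal sketch of that query, offered as a complement to reading the dump by hand; the class name DeadlockProbe is made up for illustration.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper class, named for illustration only.
class DeadlockProbe {

    /** Returns info on deadlocked threads, or an empty array if none are found. */
    static ThreadInfo[] deadlockedThreads() {
        ThreadMXBean mx = ManagementFactory.getThreadMXBean();
        // findDeadlockedThreads also covers java.util.concurrent ownable synchronizers;
        // findMonitorDeadlockedThreads only sees synchronized monitors.
        long[] ids = mx.isSynchronizerUsageSupported()
                ? mx.findDeadlockedThreads()
                : mx.findMonitorDeadlockedThreads();
        if (ids == null) {
            return new ThreadInfo[0];
        }
        return mx.getThreadInfo(ids,
                mx.isObjectMonitorUsageSupported(),
                mx.isSynchronizerUsageSupported());
    }

    public static void main(String[] args) {
        for (ThreadInfo info : deadlockedThreads()) {
            System.out.println(info.getThreadName() + " is waiting for " + info.getLockName()
                    + " held by " + info.getLockOwnerName());
        }
    }
}
```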

What to observe

The overall health of JVM is based on the interaction of threads, resources, partners/systems/actors along with the OS, GC policy and hardware.

- Ensure the system has been subjected to some good loads and everything has initialized/stabilized before starting the thread capture cycle.

- Ensure no deadlock exists in the JVM. If there are deadlocks, a restart is the only way to clear them. Analyze the locking and try to establish an order of locking, or avoid the locks entirely.

- Verify GC or Memory is not a bottleneck

- Check that the Finalizer thread is not blocked. It is possible for the Finalizer thread to be blocked for a lock held by a user thread, leading to an accumulation of finalizable objects and more frequent GCs.

- Check for threads requesting new memory too frequently. In the JRockit VM, these can be detected as threads executing jrockit.vm.Allocator.getNewTLA()

- Use Blocked Lock Chain information if available

- Identified by JVM as dependent chains or blocked threads. Use this to navigate the chain and reduce the locking.

- Search and Identify reason for STUCK threads

- Are they harmless or bad

- Are external systems (database/services/backends) the cause of slowness?

- Based on hot spots/blocked call patterns.

- Sometimes the health of one server might be closely linked to the other servers it is interacting with, especially in a clustered environment. In those cases, it might be necessary to gather and analyze thread dumps from multiple servers. For instance, in WLS, deployment troubleshooting will require thread dump analysis of the Admin Server along with the Managed Server where the application is being deployed, or of Oracle Service Bus (OSB) along with the SOA Server instance.

- Parallel capture from all linked pieces simultaneously and analyze.

- Look for excess threads. These can be for GC (a 12 core hyperthreaded box might lead to 24 Parallel GC threads), or WLS can be running with a large number of Muxer threads. Try to trim the number of threads, as excess threads only lead to extra thread switching and degraded performance.

- Parallel GC threads can be controlled by using the jvm vendor specific flags.

- For WLS, it is best to set the number of Native Muxer threads to 4. Use -Dweblogic.SocketReaders=4 to tweak the number of Muxer threads.

- Ensure the WLS server is using the Native Muxer and not the JavaSocketMuxer. Possible reasons for falling back to the Java Socket Muxer include not setting LD_LIBRARY_PATH or SHLIB_PATH to include the directory containing the native libraries. The Native Muxer delivers better performance than the Java Socket Muxer, as it uses native poll to read only the sockets that have data readily available instead of doing a blocking read from each socket.

What Next?

- Analyze the dependencies between code and locks

- Might require a subject matter expert (field or customer) who can understand, identify and implement optimizations

- Resolving bottlenecks due to Locking

- Identify reason for locks and call invocations

- Avoid call patterns

- Code change to avoid locks - use finer grained locking instead of method/coarser locking

- Increase resources that are under contention or used in hot spots

- Increase size of connection pools

- Increase memory

- Cache judiciously (remote stubs, resources, handlers...)

- Separate work managers in WLS for dedicated execution threads (use wl-dispatch-policy). Useful if an application always requires dedicated threads to avoid thread starvation or delay in execution

- Avoid extraneous invocations/interruptions, such as debug logging, excessive caching, frequent GCs, or relying on exceptions to handle normal logic

- Repeat the cycle - capture, analyze, optimize and fine tune ...
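As an illustration of the finer-grained locking advice above, here is a minimal sketch with a hypothetical class (it is not taken from any WLS code). Two independent counters each get their own lock object, so threads updating one counter never block threads updating the other. With synchronized methods, both counters would share the single monitor of the instance.

```java
// Hypothetical example: two unrelated counters guarded by separate locks.
class HitCounters {
    // Coarse alternative (not used here): declare every method "synchronized",
    // which makes all callers contend on the one monitor of "this".
    private final Object pageLock = new Object();
    private final Object apiLock = new Object();
    private long pageHits;
    private long apiHits;

    void recordPageHit() {
        synchronized (pageLock) { // only page-hit updates contend here
            pageHits++;
        }
    }

    void recordApiHit() {
        synchronized (apiLock) { // independent of pageLock
            apiHits++;
        }
    }

    long pageHits() {
        synchronized (pageLock) {
            return pageHits;
        }
    }

    long apiHits() {
        synchronized (apiLock) {
            return apiHits;
        }
    }
}
```

Splitting locks like this only helps when the guarded state really is independent; if one operation must update both counters atomically, the locks have to be acquired in a fixed order to avoid deadlock.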

Summary

This section of the series discussed how to analyze thread dumps and navigate lock chains as well as some tips and pointers on what to look for and optimize. The next section will go into tools that can help automate thread dump analysis, some real world examples and limitations of thread dumps.

Analyzing Thread Dumps in Middleware - Part 1

This section deals with the basics of thread states and locking in general. Subsequent sections will deal with capturing and analyzing thread dumps, with particular emphasis on WebLogic Application Server thread dumps.

Thread Dumps

A Thread Dump is a brief snapshot, in textual format, of the threads within a Java Virtual Machine (JVM). It is the equivalent of a process dump in the native world. The dump includes data about each thread: the name of the thread, priority, thread group, state (running/blocked/waiting/parking), and the execution stack in the form of a thread stack trace. All threads are included in the dump: the Java VM threads (GC threads/scavengers/monitors/others) as well as application and server threads. Newer versions of JVMs also report blocked thread chains (for example, ThreadA is blocked on a resource held by ThreadB) as well as deadlocks (circular dependencies among threads for locks).

Different JVM Vendors display the data in different formats (markers for start/end of thread dumps, reporting of locks and thread states, method signatures) but the underlying data exposed by the thread dumps remains the same across vendors.
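To make the contents of a thread dump concrete, the sketch below builds a simplified dump from inside the JVM using the standard java.lang.management API. The class name and output layout are illustrative assumptions and do not reproduce any vendor format; dumps triggered externally also list VM-internal threads that this API does not expose.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadInfo;
import java.lang.management.ThreadMXBean;

// Hypothetical helper that prints name, state, lock and stack for each Java thread.
class MiniThreadDump {
    static String capture() {
        ThreadMXBean bean = ManagementFactory.getThreadMXBean();
        // Request lock details only where the running JVM supports them.
        ThreadInfo[] infos = bean.dumpAllThreads(
                bean.isObjectMonitorUsageSupported(),
                bean.isSynchronizerUsageSupported());
        StringBuilder out = new StringBuilder();
        for (ThreadInfo info : infos) {
            if (info == null) {
                continue; // defensive: skip threads that vanished mid-capture
            }
            out.append('"').append(info.getThreadName()).append('"')
               .append(" id=").append(info.getThreadId())
               .append(" state=").append(info.getThreadState());
            if (info.getLockName() != null) {
                out.append(" waiting on ").append(info.getLockName());
                if (info.getLockOwnerName() != null) {
                    out.append(" held by \"").append(info.getLockOwnerName()).append('"');
                }
            }
            out.append('\n');
            for (StackTraceElement frame : info.getStackTrace()) {
                out.append("    at ").append(frame).append('\n');
            }
        }
        // Returns null when no threads are deadlocked on object monitors.
        long[] deadlocked = bean.findMonitorDeadlockedThreads();
        out.append("deadlocked threads: ")
           .append(deadlocked == null ? 0 : deadlocked.length).append('\n');
        return out.toString();
    }
}
```

Calling `System.out.println(MiniThreadDump.capture())` prints one header line per thread followed by its stack frames, which mirrors the per-thread structure that every vendor format shares.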

Sample of a JRockit Thread Dump:

===== FULL THREAD DUMP ===============
Mon Feb 06 11:38:58 2012
Oracle JRockit(R) R28.0.0-679-130297-1.6.0_17-20100312-2123-windows-ia32

"Main Thread" id=1 idx=0x4 tid=4184 prio=5 alive, in native
    at java/net/PlainSocketImpl.socketConnect(Ljava/net/InetAddress;II)V(Native Method)
    at java/net/PlainSocketImpl.doConnect(PlainSocketImpl.java:333)
    ^-- Holding lock: java/net/SocksSocketImpl@0x10204E50[biased lock]
    at java/net/PlainSocketImpl.connectToAddress(PlainSocketImpl.java:195)
    at java/net/PlainSocketImpl.connect(PlainSocketImpl.java:182)
    at java/net/SocksSocketImpl.connect(SocksSocketImpl.java:366)
    at java/net/Socket.connect(Socket.java:525)
    at java/net/Socket.connect(Socket.java:475)
    at sun/net/NetworkClient.doConnect(NetworkClient.java:163)
    at sun/net/www/http/HttpClient.openServer(HttpClient.java:394)
    at sun/net/www/http/HttpClient.openServer(HttpClient.java:529)
    ^-- Holding lock: sun/net/www/http/HttpClient@0x10203FB8[biased lock]
    at sun/net/www/http/HttpClient.<init>(HttpClient.java:233)
    at sun/net/www/http/HttpClient.New(HttpClient.java:306)
    at sun/net/www/http/HttpClient.New(HttpClient.java:323)
    at sun/net/www/protocol/http/HttpURLConnection.getNewHttpClient(HttpURLConnection.java:860)
    at sun/net/www/protocol/http/HttpURLConnection.plainConnect(HttpURLConnection.java:801)
    at sun/net/www/protocol/http/HttpURLConnection.connect(HttpURLConnection.java:726)
    at sun/net/www/protocol/http/HttpURLConnection.getOutputStream(HttpURLConnection.java:904)
    ^-- Holding lock: sun/net/www/protocol/http/HttpURLConnection@0x101FAD88[biased lock]
    at post.main(post.java:29)
    at jrockit/vm/RNI.c2java(IIIII)V(Native Method)
    -- end of trace

"(Signal Handler)" id=2 idx=0x8 tid=4668 prio=5 alive, daemon

"(OC Main Thread)" id=3 idx=0xc tid=6332 prio=5 alive, native_waiting, daemon

"(GC Worker Thread 1)" id=? idx=0x10 tid=1484 prio=5 alive, daemon

"(GC Worker Thread 2)" id=? idx=0x14 tid=5548 prio=5 alive, daemon

"(Code Generation Thread 1)" id=4 idx=0x30 tid=8016 prio=5 alive, native_waiting, daemon

"(Code Optimization Thread 1)" id=5 idx=0x34 tid=3596 prio=5 alive, native_waiting, daemon

"(VM Periodic Task)" id=6 idx=0x38 tid=1352 prio=10 alive, native_blocked, daemon

"(Attach Listener)" id=7 idx=0x3c tid=6592 prio=5 alive, native_blocked, daemon

"Finalizer" id=8 idx=0x40 tid=1576 prio=8 alive, native_waiting, daemon
    at jrockit/memory/Finalizer.waitForFinalizees(J[Ljava/lang/Object;)I(Native Method)
    at jrockit/memory/Finalizer.access$700(Finalizer.java:12)
    at jrockit/memory/Finalizer$4.run(Finalizer.java:183)
    at java/lang/Thread.run(Thread.java:619)
    at jrockit/vm/RNI.c2java(IIIII)V(Native Method)
    -- end of trace

"Reference Handler" id=9 idx=0x44 tid=3012 prio=10 alive, native_waiting, daemon
    at java/lang/ref/Reference.waitForActivatedQueue(J)Ljava/lang/ref/Reference;(Native Method)
    at java/lang/ref/Reference.access$100(Reference.java:11)
    at java/lang/ref/Reference$ReferenceHandler.run(Reference.java:82)
    at jrockit/vm/RNI.c2java(IIIII)V(Native Method)
    -- end of trace

"(Sensor Event Thread)" id=10 idx=0x48 tid=980 prio=5 alive, native_blocked, daemon

"VM JFR Buffer Thread" id=11 idx=0x4c tid=6072 prio=5 alive, in native, daemon

===== END OF THREAD DUMP ===============

Sample of a Sun HotSpot Thread Dump (executing the same code as above):

2012-02-06 11:37:30
Full thread dump Java HotSpot(TM) Client VM (16.0-b13 mixed mode):

"Low Memory Detector" daemon prio=6 tid=0x0264bc00 nid=0x520 runnable [0x00000000]
   java.lang.Thread.State: RUNNABLE

"CompilerThread0" daemon prio=10 tid=0x02647400 nid=0x1ae8 waiting on condition [0x00000000]
   java.lang.Thread.State: RUNNABLE

"Attach Listener" daemon prio=10 tid=0x02645800 nid=0x1480 runnable [0x00000000]
   java.lang.Thread.State: RUNNABLE

"Signal Dispatcher" daemon prio=10 tid=0x02642800 nid=0x644 waiting on condition [0x00000000]
   java.lang.Thread.State: RUNNABLE

"Finalizer" daemon prio=8 tid=0x02614800 nid=0x1e70 in Object.wait() [0x1882f000]
   java.lang.Thread.State: WAITING (on object monitor)
    at java.lang.Object.wait(Native Method)
    - waiting on <0x04660b18> (a java.lang.ref.ReferenceQueue$Lock)
    at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:118)
    - locked <0x04660b18> (a java.lang.ref.ReferenceQueue$Lock)
    at java.lang.ref.ReferenceQueue.remove(ReferenceQueue.java:134)
    at java.lang.ref.Finalizer$FinalizerThread.run(Finalizer.java:159)

"Reference Handler" daemon prio=10 tid=0x02610000 nid=0x1b84 in Object.wait() [0x1879f000]
   java.lang.Thread.State: WAITING (on object monitor)
    at java.lang.Object.wait(Native Method)
    - waiting on <0x04660a20> (a java.lang.ref.Reference$Lock)
    at java.lang.Object.wait(Object.java:485)
    at java.lang.ref.Reference$ReferenceHandler.run(Reference.java:116)
    - locked <0x04660a20> (a java.lang.ref.Reference$Lock)

"main" prio=6 tid=0x00ec9400 nid=0x19e4 runnable [0x0024f000]
   java.lang.Thread.State: RUNNABLE
    at java.net.PlainSocketImpl.socketConnect(Native Method)
    at java.net.PlainSocketImpl.doConnect(PlainSocketImpl.java:333)
    - locked <0x04642958> (a java.net.SocksSocketImpl)
    at java.net.PlainSocketImpl.connectToAddress(PlainSocketImpl.java:195)
    at java.net.PlainSocketImpl.connect(PlainSocketImpl.java:182)
    at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:366)
    at java.net.Socket.connect(Socket.java:525)
    at java.net.Socket.connect(Socket.java:475)
    at sun.net.NetworkClient.doConnect(NetworkClient.java:163)
    at sun.net.www.http.HttpClient.openServer(HttpClient.java:394)
    at sun.net.www.http.HttpClient.openServer(HttpClient.java:529)
    - locked <0x04642058> (a sun.net.www.http.HttpClient)
    at sun.net.www.http.HttpClient.<init>(HttpClient.java:233)
    at sun.net.www.http.HttpClient.New(HttpClient.java:306)
    at sun.net.www.http.HttpClient.New(HttpClient.java:323)
    at sun.net.www.protocol.http.HttpURLConnection.getNewHttpClient(HttpURLConnection.java:860)
    at sun.net.www.protocol.http.HttpURLConnection.plainConnect(HttpURLConnection.java:801)
    at sun.net.www.protocol.http.HttpURLConnection.connect(HttpURLConnection.java:726)
    at sun.net.www.protocol.http.HttpURLConnection.getOutputStream(HttpURLConnection.java:904)
    - locked <0x04639dd0> (a sun.net.www.protocol.http.HttpURLConnection)

"VM Thread" prio=10 tid=0x0260d000 nid=0x4dc runnable

"VM Periodic Task Thread" prio=10 tid=0x02656000 nid=0x16b8 waiting on condition

JNI global references: 667

Heap
 def new generation total 4928K, used 281K [0x04660000, 0x04bb0000, 0x09bb0000)
  eden space 4416K, 6% used [0x04660000, 0x046a6460, 0x04ab0000)
  from space 512K, 0% used [0x04ab0000, 0x04ab0000, 0x04b30000)
  to space 512K, 0% used [0x04b30000, 0x04b30000, 0x04bb0000)
 tenured generation total 10944K, used 0K [0x09bb0000, 0x0a660000, 0x14660000)
   the space 10944K, 0% used [0x09bb0000, 0x09bb0000, 0x09bb0200, 0x0a660000)
 compacting perm gen total 12288K, used 1704K [0x14660000, 0x15260000, 0x18660000)
   the space 12288K, 13% used [0x14660000, 0x1480a290, 0x1480a400, 0x15260000)
No shared spaces configured.

Sample of an IBM Thread Dump:

NULL ------------------------------------------------------------------------
0SECTION THREADS subcomponent dump routine
NULL =================================
NULL
1XMCURTHDINFO Current Thread Details
NULL ----------------------
NULL
1XMTHDINFO All Thread Details
NULL ------------------
NULL
2XMFULLTHDDUMP Full thread dump J9 VM (J2RE 6.0 IBM J9 2.4 Windows Vista x86-32 build jvmwi3260sr4ifx-20090506_3499120090506_034991_lHdSMr, native threads):
3XMTHREADINFO "main" TID:0x00554B00, j9thread_t:0x00783AE4, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x1E48, native priority:0x5, native policy:UNKNOWN)
4XESTACKTRACE at com/ibm/oti/vm/BootstrapClassLoader.loadClass(BootstrapClassLoader.java:65)
4XESTACKTRACE at sun/net/NetworkClient.isASCIISuperset(NetworkClient.java:122)
4XESTACKTRACE at sun/net/NetworkClient.<clinit>(NetworkClient.java:83)
4XESTACKTRACE at java/lang/J9VMInternals.initializeImpl(Native Method)
4XESTACKTRACE at java/lang/J9VMInternals.initialize(J9VMInternals.java:200(Compiled Code))
4XESTACKTRACE at java/lang/J9VMInternals.initialize(J9VMInternals.java:167(Compiled Code))
4XESTACKTRACE at sun/net/www/protocol/http/HttpURLConnection.getNewHttpClient(HttpURLConnection.java:783)
4XESTACKTRACE at sun/net/www/protocol/http/HttpURLConnection.plainConnect(HttpURLConnection.java:724)
4XESTACKTRACE at sun/net/www/protocol/http/HttpURLConnection.connect(HttpURLConnection.java:649)
4XESTACKTRACE at sun/net/www/protocol/http/HttpURLConnection.getOutputStream(HttpURLConnection.java:827)
4XESTACKTRACE at post.main(post.java:29)
3XMTHREADINFO "JIT Compilation Thread" TID:0x00555000, j9thread_t:0x00783D48, state:CW, prio=10
3XMTHREADINFO1 (native thread ID:0x111C, native priority:0xB, native policy:UNKNOWN)
3XMTHREADINFO "Signal Dispatcher" TID:0x6B693300, j9thread_t:0x00784210, state:R, prio=5
3XMTHREADINFO1 (native thread ID:0x1E34, native priority:0x5, native policy:UNKNOWN)
4XESTACKTRACE at com/ibm/misc/SignalDispatcher.waitForSignal(Native Method)
4XESTACKTRACE at com/ibm/misc/SignalDispatcher.run(SignalDispatcher.java:54)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B693800, j9thread_t:0x0078EABC, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x1AA4, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B695500, j9thread_t:0x0078ED20, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x14F8, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B695A00, j9thread_t:0x0078EF84, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x9E0, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B698800, j9thread_t:0x0078F1E8, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x1FB8, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B698D00, j9thread_t:0x0078F44C, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x1A58, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B69BB00, j9thread_t:0x0078F6B0, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0x1430, native priority:0x5, native policy:UNKNOWN)
3XMTHREADINFO "Gc Slave Thread" TID:0x6B69C000, j9thread_t:0x029D8FE4, state:CW, prio=5
3XMTHREADINFO1 (native thread ID:0xBC4, native priority:0x5, native policy:UNKNOWN)
NULL ------------------------------------------------------------------------

- New

- Runnable

- Non-Runnable

- Sleep for a time duration

- Wait for a condition/event

- Blocked for a lock

- Dead
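In the Java API these states surface as java.lang.Thread.State values: New is NEW, Runnable is RUNNABLE, sleeping for a duration is TIMED_WAITING, waiting for a condition is WAITING, blocked for a lock is BLOCKED, and Dead is TERMINATED. The sketch below, using a hypothetical class name, observes three of them on a single thread.

```java
// Hypothetical demo: watches one thread move through NEW, BLOCKED and TERMINATED.
class ThreadStateDemo {
    static Thread.State[] observe() {
        final Object lock = new Object();
        // The worker only needs to acquire and release the lock.
        Thread worker = new Thread(() -> {
            synchronized (lock) {
                // nothing to do once the lock is acquired
            }
        });
        Thread.State fresh = worker.getState(); // not started yet: NEW
        Thread.State blocked;
        try {
            synchronized (lock) {
                worker.start();
                // Poll until the worker is parked on the monitor we hold.
                while (worker.getState() != Thread.State.BLOCKED) {
                    Thread.sleep(1);
                }
                blocked = worker.getState();
            }
            worker.join(); // lock released above, so the worker can finish
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while observing", e);
        }
        Thread.State dead = worker.getState(); // finished: TERMINATED
        return new Thread.State[] { fresh, blocked, dead };
    }
}
```

A thread dump taken while the worker is parked on the monitor would show it as blocked, together with the lock it is waiting for.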